Integrating artificial intelligence into business operations promises tremendous benefits, yet the implementation reality tells a sobering story. A staggering 70-80% of AI projects fail to meet their objectives. Even more concerning, recent data shows the situation is worsening: the share of businesses scrapping most of their AI initiatives jumped to 42% in 2025, up from just 17% the year before.

Behind these failures often lies a fundamental oversight: ineffective human involvement in AI systems. Human in the loop (HITL), both a technical safeguard and a strategic foundation for sustainable AI innovation, is designed to address this challenge.

In this article, we explore how human expertise strengthens AI outcomes across its lifecycle, why HITL matters more than ever, and how organizations can implement it effectively.

Human in the Loop (HITL) Meaning — Beyond the Buzzword

Human in the Loop (HITL) is a design approach for AI systems that intentionally integrates human expertise at critical stages to enhance accuracy, reliability, and alignment with real-world needs. This collaborative framework operates across three key phases:

- Training. Humans curate and label datasets, correct model outputs, and refine algorithms during development. For example, data annotators tag medical images to train diagnostic AI, while engineers adjust misclassified data points (cases where an AI or machine learning model gives the wrong answer) to improve pattern recognition.

- Inference/Decision-Making. In many critical applications, AI systems suggest decisions or actions, but a human ultimately makes the final call or approves the AI’s recommendation before it is implemented. For instance, in healthcare, radiologists verify AI-identified tumors; in the legal industry, lawyers review and make a final judgment regarding documents that an AI model flagged with potential risks. This step prevents automated errors in evaluations.

- Feedback Loops. Humans correct model errors and update training data, creating iterative improvement cycles. Active learning systems, for instance, prioritize low-confidence predictions for human review, refining the AI’s understanding of edge cases over time.
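
The feedback-loop idea above, where low-confidence predictions are prioritized for human review, can be sketched in a few lines. This is a minimal illustration, not a production active learning pipeline: the `Prediction` type, the `select_for_review` function, the review `budget`, and the sample scan IDs are all hypothetical names introduced for this example.

```python
from typing import NamedTuple


class Prediction(NamedTuple):
    item_id: str
    label: str
    confidence: float  # model's probability for its predicted label, 0.0 to 1.0


def select_for_review(predictions: list[Prediction], budget: int) -> list[Prediction]:
    """Return the `budget` least-confident predictions for human labeling."""
    ranked = sorted(predictions, key=lambda p: p.confidence)
    return ranked[:budget]


# Hypothetical model outputs for four medical images.
predictions = [
    Prediction("scan-001", "benign", 0.97),
    Prediction("scan-002", "tumor", 0.55),
    Prediction("scan-003", "benign", 0.61),
    Prediction("scan-004", "tumor", 0.92),
]

# With capacity for two reviews, annotators see the two least certain cases.
review_queue = select_for_review(predictions, budget=2)
print([p.item_id for p in review_queue])  # ['scan-002', 'scan-003']
```

The corrected labels from the review queue would then be added back to the training data, which is what closes the loop.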

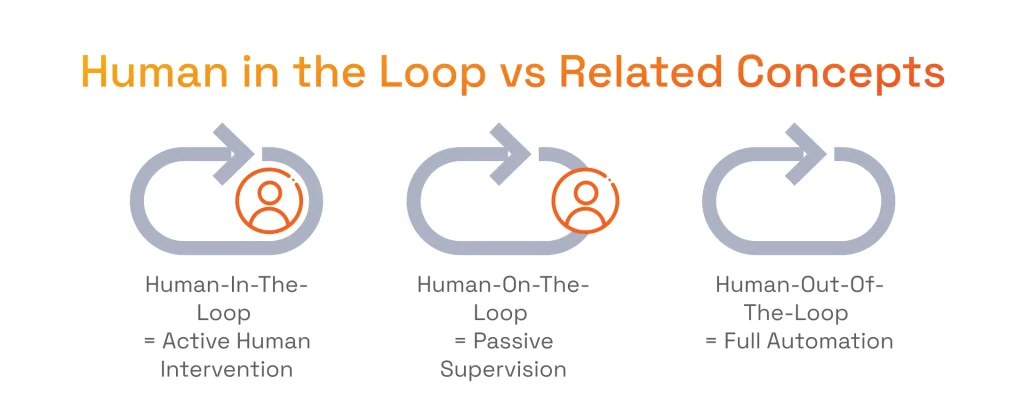

To avoid confusion, let’s clarify the differences between related but distinct approaches:

- Human-in-the-Loop (HITL): Direct human intervention in AI operations (e.g., approving loan decisions).

- Human-on-the-Loop (HOTL): Passive monitoring of autonomous systems, intervening only during anomalies (e.g., overseeing stock trading algorithms).

- Human-out-of-the-Loop: Full automation without oversight (e.g., real-time financial fraud detection).

By strategically deploying HITL, organizations balance AI scalability with human judgment. This approach ensures systems remain adaptable, ethical, and context-aware.

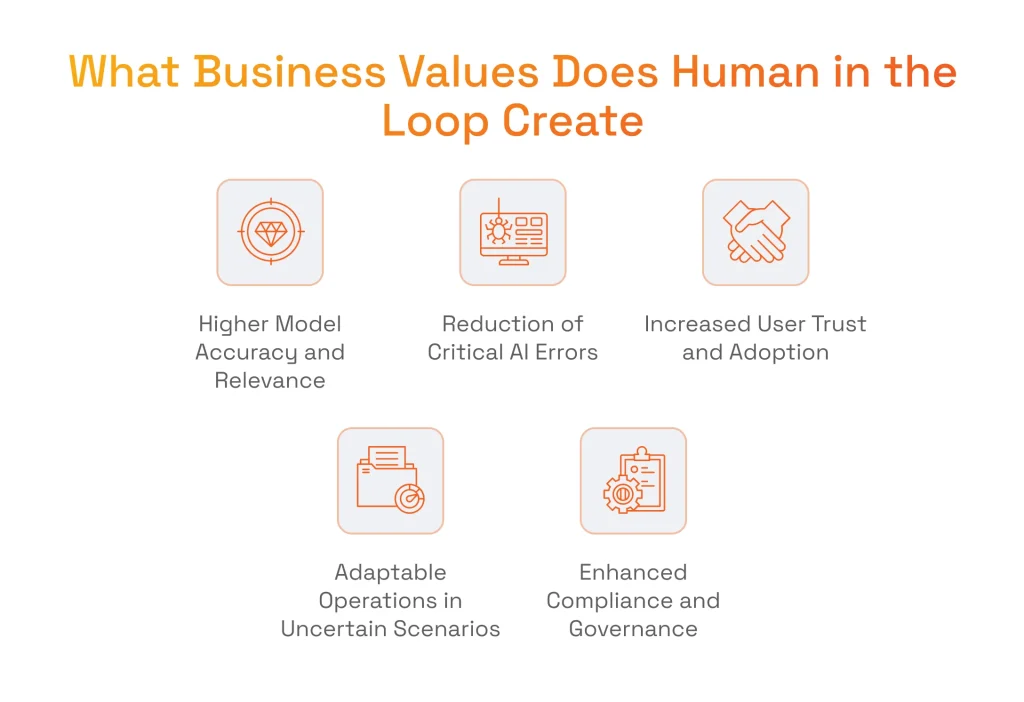

What Business Value Does Human in the Loop Create

The human-in-the-loop approach isn’t just a safety feature — it’s a strategic value driver that delivers measurable benefits for businesses that leverage AI. What specific benefits? Here’s a list of the essential ones.

Higher Model Accuracy with Real-World Relevance

In human-in-the-loop automation, machine learning teams supply human input during data labeling, model validation, and decision overrides. This input gives the model nuanced context that automated training alone often misses, which improves accuracy and keeps outputs aligned with actual business needs.

Such a continuous feedback loop between human judgment and machine learning creates a more accurate and consistent system over time and leads to fewer costly errors, better customer experiences, and models that adapt more reliably to changing conditions.

Fewer High-Stakes Errors and Failures

AI without human oversight can optimize for metrics but ignore human values, creating mistakes. In high-risk environments such as finance, healthcare, autonomous systems, or customer service, these mistakes can lead to significant financial loss, legal exposure, or reputational damage.

HITL serves as a safety net in such situations as it introduces critical guardrails by involving humans in testing and evaluation to catch and correct errors. This is especially valuable when models lack enough training data or context.

Greater User Trust and Adoption

Without human oversight, guaranteeing accurate model outcomes is challenging. Models can struggle to explain their results or to show the reasoning behind them. HITL strengthens trust by making AI more transparent, understandable to humans, and aligned with real-world business needs.

Plus, when users know that expert oversight is part of the process, they’re more likely to feel confident using and relying on the system. This is especially true for highly regulated niches, like finance and healthcare, where understanding AI rationale is crucial for avoiding exposure to fraud, misdiagnosis, biased decisions, or compliance violations.

Operational Flexibility in Uncertain Scenarios

In any niche, there are scenarios where typical rules shift and data patterns evolve. AI models often cannot anticipate these changes, which leads to inaccurate results. HITL, in turn, allows human judgment to step in when models face uncertainty, incomplete data, or unfamiliar scenarios.

Plus, it enables quicker responses to real-world variability. This collaboration between humans and AI helps organizations preserve continuity, accuracy, and control, whether they are handling exceptions, adapting to market changes, or managing crises.

Stronger Compliance and Governance

In regulated industries, AI systems must operate within legal, ethical, and policy boundaries. Because AI systems alone cannot always deliver fully correct results and transparent outcomes, human oversight keeps AI-driven systems accountable for their decisions.

Human reviewers check outputs against regulatory standards such as GDPR, HIPAA, or the EU AI Act and maintain robust audit trails and documentation, making it easier to demonstrate accountability during reviews or investigations. These human-in-the-loop (HITL) features ensure AI delivers meaningful, responsible, and reliable value to businesses across complex and evolving environments.

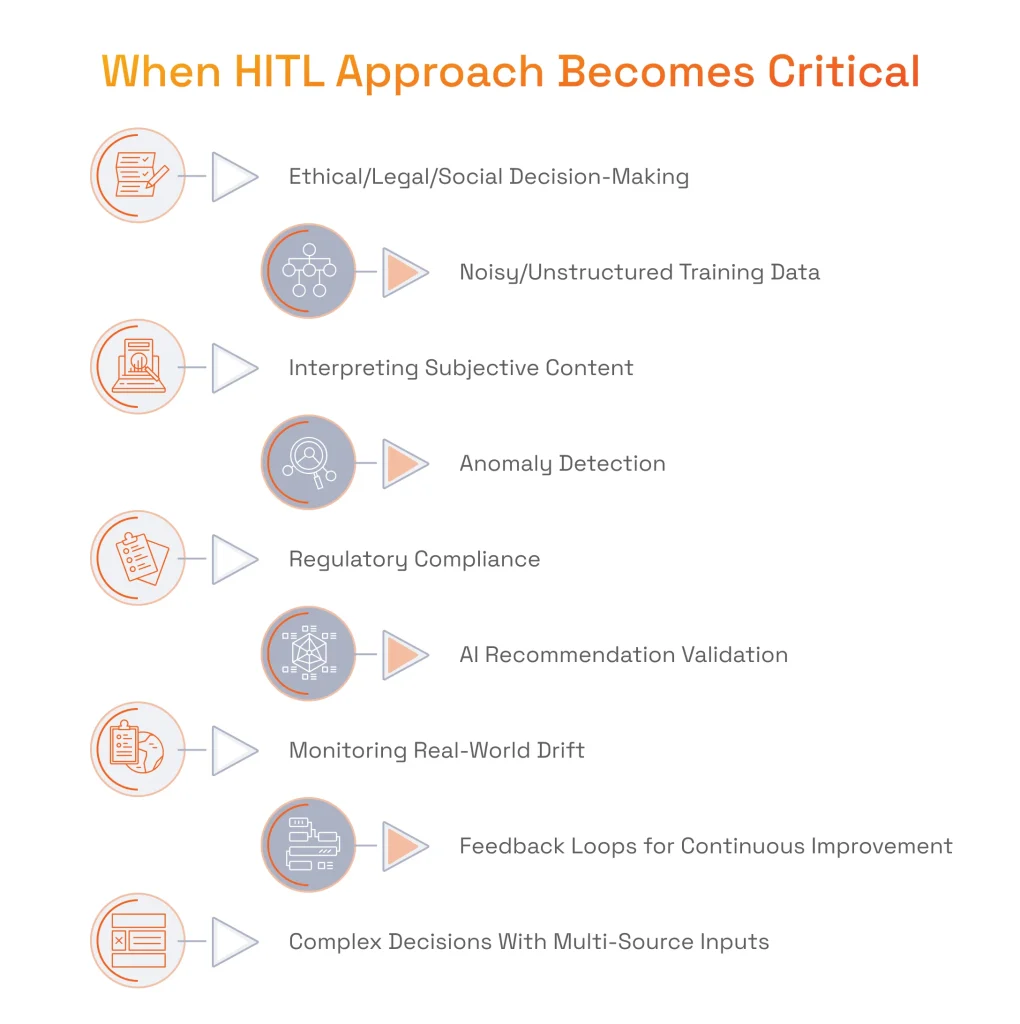

Cases Where Human in the Loop Approach Becomes Critical

Now that we understand the business value of human in the loop artificial intelligence, let’s look at the cases where this system design approach is vital.

When AI is involved in decisions that affect people’s lives — such as hiring, healthcare diagnostics, or legal risk assessments — human oversight is vital. These scenarios carry ethical and legal weight, where fairness, bias mitigation, and social responsibility come into play. Human-in-the-loop ensures that sensitive outcomes reflect not just data patterns, but human values and context.

Training Human in the Loop AI with Ambiguous, Unstructured, or Noisy Data

AI is only as good as the data it learns from — and early in development, that data is often messy. Humans must label, clean, and contextualize ambiguous or inconsistent inputs in fields like natural language processing or computer vision. Human-in-the-loop brings essential domain knowledge and judgment into the training loop, improving model quality from the ground up.

For example, we at SPD Technology developed a computer vision-powered app for seniors’ health and wellness that uses health data in different formats and patterns. To make the solution’s results precise, we collaborated with medical experts to label custom datasets for training our AI/ML models. We also kept the data accurate and reliable by conducting regular data quality reviews under the guidance of medical experts.

Interpreting Subjective Content or Tone

When AI systems analyze content filled with nuance — such as sarcasm, emotional tone, or culturally sensitive imagery — human interpretation becomes indispensable. Human-generated content, language, and visuals often carry meaning beyond what’s literal. Human-in-the-loop helps AI discern intent, sentiment, or offensiveness with greater accuracy, reducing misclassification and improving user experience.

Anomaly Detection in Critical Systems

In sectors like cybersecurity, finance, or industrial automation, rare anomalies may signal major threats or harmless glitches. Machines can flag outliers, but humans are needed to interpret them in context. Human-in-the-loop ensures that high-risk incidents are correctly prioritized, helping avoid costly disruptions while reducing false positives.
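
As a rough sketch of that division of labor, the example below has the machine flag outliers and order them by severity, leaving the interpretation to an analyst. It assumes a simple z-score rule against a known-normal baseline; the function name `flag_anomalies`, the threshold of 3, and the sample readings are illustrative assumptions, and real systems typically use more robust detectors.

```python
from statistics import mean, stdev


def flag_anomalies(
    baseline: list[float], readings: list[float], z_threshold: float = 3.0
) -> list[tuple[float, float]]:
    """Flag readings that deviate strongly from a known-normal baseline.

    Returns (value, z_score) pairs, most extreme first, for human triage.
    Assumes the baseline has at least two points and non-zero spread.
    """
    mu, sigma = mean(baseline), stdev(baseline)
    flagged = []
    for value in readings:
        z = abs(value - mu) / sigma
        if z > z_threshold:
            flagged.append((value, round(z, 1)))
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Hypothetical sensor data: a stable baseline and four new readings.
baseline = [100, 102, 98, 101, 99, 100]
readings = [101, 250, 99.5, 93]

triage_queue = flag_anomalies(baseline, readings)
for value, z in triage_queue:
    # The model only flags; an analyst decides whether this is a threat or a glitch.
    print(f"reading={value} z={z} -> needs human review")
```

Sorting by deviation means the analyst sees the most extreme case first, which is one simple way to prioritize high-risk incidents.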

Ensuring Regulatory Compliance and Auditability

AI in regulated industries — from finance to healthcare — must follow strict rules. Human-in-the-loop enables humans to review, explain, and document decisions made by machines. This level of auditability is key for meeting legal standards, conducting internal reviews, and maintaining public trust, especially when automated systems operate at scale.
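
One way to picture the documentation side is a structured record written for every human review. The sketch below is an assumption about what such a record could contain, not a schema required by any regulation: `ReviewRecord`, `log_review`, and all field names and sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ReviewRecord:
    case_id: str
    model_version: str
    model_output: str
    reviewer_id: str
    decision: str       # "approved" or "overridden"
    justification: str  # the reviewer's explanation, kept for later audits
    reviewed_at: str    # ISO 8601 timestamp in UTC


def log_review(log: list[str], **fields: str) -> ReviewRecord:
    """Create an immutable review record and append it to the log as JSON."""
    record = ReviewRecord(reviewed_at=datetime.now(timezone.utc).isoformat(), **fields)
    log.append(json.dumps(asdict(record), sort_keys=True))
    return record


audit_log: list[str] = []  # stand-in for append-only storage
record = log_review(
    audit_log,
    case_id="loan-1042",
    model_version="v3",
    model_output="decline",
    reviewer_id="analyst-7",
    decision="overridden",
    justification="income documents verified manually",
)
print(audit_log[0])
```

Capturing the model version next to the human decision lets an auditor later reconstruct both what the machine proposed and why a person accepted or overrode it.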

Validating AI Recommendations Before Execution

When AI generates recommendations (e.g., trade suggestions, medical treatments, policy flags) but a human must confirm or override before action is taken, human-in-the-loop ensures appropriate checks and balances. This is particularly important in high-stakes domains like healthcare, where AI may analyze medical images to aid diagnosis, but human doctors must verify AI-detected anomalies before determining treatment.
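
The confirm-or-override step can be expressed as a small approval gate, where nothing runs until a reviewer says yes. This is a minimal sketch under stated assumptions: `Recommendation`, `execute_with_approval`, and `demo_reviewer` are hypothetical names, and the reviewer here is a stand-in function rather than a real review interface.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Recommendation:
    action: str
    rationale: str


def execute_with_approval(
    rec: Recommendation,
    reviewer: Callable[[Recommendation], bool],
    execute: Callable[[str], None],
) -> bool:
    """Run `execute` only if the human reviewer approves; return the decision."""
    approved = reviewer(rec)
    if approved:
        execute(rec.action)
    return approved


def demo_reviewer(rec: Recommendation) -> bool:
    # Stand-in for a real review UI: approve only when a rationale is given.
    return bool(rec.rationale.strip())


executed: list[str] = []  # records the actions that actually ran

ok = execute_with_approval(
    Recommendation("order follow-up MRI", "lesion flagged in image 12"),
    demo_reviewer,
    executed.append,
)
rejected = execute_with_approval(
    Recommendation("start treatment", ""),
    demo_reviewer,
    executed.append,
)
print(ok, rejected, executed)
```

Passing the reviewer in as a function keeps the gate independent of how the review happens, whether that is a dashboard, a ticket queue, or a sign-off form.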

Monitoring System Drift or Real-World Changes

Markets shift, language evolves, and user behavior changes — often faster than AI models can adapt. Incorporating human-in-the-loop into the generative AI development process allows teams to spot data drift, detect when a model’s performance starts to degrade, investigate root causes, and trigger retraining.
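
To make drift detection concrete, here is a sketch that uses the Population Stability Index (PSI), one common drift metric, to compare a feature’s training-time distribution with recent production data. The choice of PSI, the 10 equal-width bins, the 0.25 alert level (a widely used rule of thumb, not a standard), and the synthetic data are all assumptions made for illustration.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a recent sample.

    Bins are equal-width over the baseline's range; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic example: production values have shifted toward the upper range.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]

drift_score = psi(baseline, recent)
needs_human_review = drift_score > 0.25  # assumed alert threshold
print(f"PSI={drift_score:.2f}, escalate to ML team: {needs_human_review}")
```

The metric only raises the flag. Investigating the root cause and deciding whether to retrain remain human tasks, as described above.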

Feedback Loops for Continuous Model Improvement

When human correction or reinforcement is required to fine-tune AI and adapt it to new or edge-case scenarios, human-in-the-loop creates a virtuous cycle of improvement. In human in the loop AI training, the testing and evaluation stage involves humans correcting any inaccurate results the machine produced, particularly where the algorithm lacks confidence. This active learning process constantly enhances the system’s performance.

Complex Multi-Source Decision Inputs

When decisions depend on a combination of structured data, human knowledge, and external context that AI cannot fully comprehend, human-in-the-loop bridges the gap. Humans can integrate information from multiple sources and apply contextual understanding that may be beyond the AI system’s capabilities.

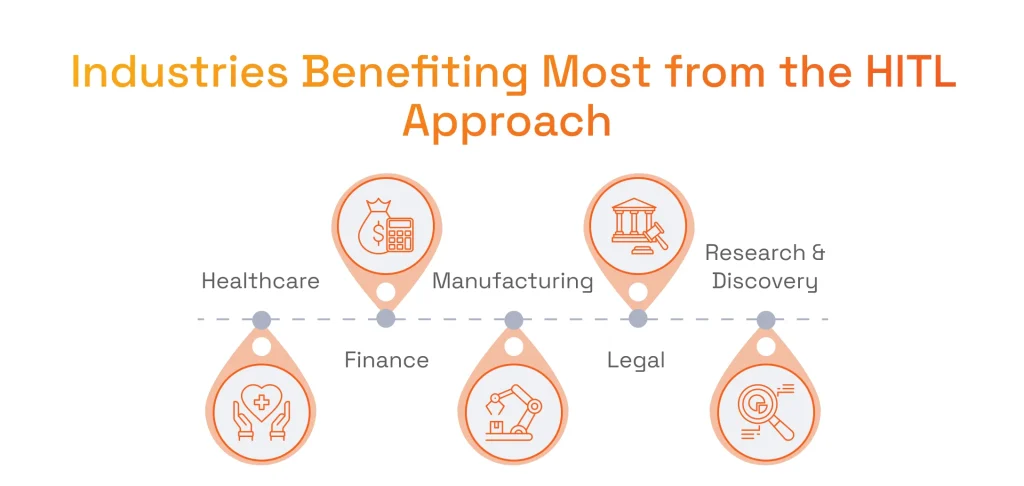

Human in the Loop (HITL): Meaning for Different Industries With Examples

In this section, we’ll provide examples of how different industries take advantage of human in the loop AI solutions.

Healthcare

In ophthalmology, AI assists in analyzing eye images to detect diseases such as diabetic retinopathy, glaucoma, and age-related macular degeneration. Ophthalmologists review and validate these AI-generated findings to ensure accurate diagnosis and treatment planning.

Besides medical imaging analytics, machine learning in healthcare helps doctors detect pathologies, supports genomic medicine and drug discovery, enables remote patient monitoring with AI and IoT, and much more.

Serhii Leleko

ML & AI Engineer at SPD Technology

“While working with data in the healthcare industry, it is always important to implement robust data governance policies to maintain patient data’s accuracy, completeness, and integrity. Ensure your organization adheres to strict data security and privacy regulations, such as HIPAA, to safeguard patient confidentiality and comply with legal requirements.”

Manufacturing

Computer vision systems can identify defects in quality control processes, but human inspectors verify critical or ambiguous cases. AI predictive data analytics services forecast potential equipment failures, but maintenance engineers validate these predictions and determine appropriate actions based on the broader operational context. Companies improve their operational efficiency by combining AI and machine learning in the manufacturing industry with the expertise of seasoned quality control professionals.

Legal

Let’s see how artificial intelligence transforms legal services. Contract analysis tools use AI to flag potential issues in legal documents, but lawyers review these findings and make final determinations. In legal research, AI can identify relevant precedents and statutes, but attorneys evaluate their applicability to specific cases. This partnership allows legal professionals to focus their expertise on interpretation and strategy while AI handles more routine document processing.

Finance

In financial technology, companies deploy fraud detection systems where AI flags suspicious transactions, but human analysts confirm whether these represent genuine fraud. In credit underwriting, AI algorithms may make initial creditworthiness assessments, but human underwriters review edge cases or applications with unusual circumstances. This approach is critical given the risks to financial institutions, consumer protection, and financial stability.

Research & Scientific Discovery

In drug discovery, AI can identify promising compounds for further investigation, but scientists decide which to pursue based on additional knowledge about biological mechanisms. AI can highlight patterns or anomalies when analyzing research data, but researchers determine their significance and implications. This collaboration accelerates the research process while maintaining scientific rigor.

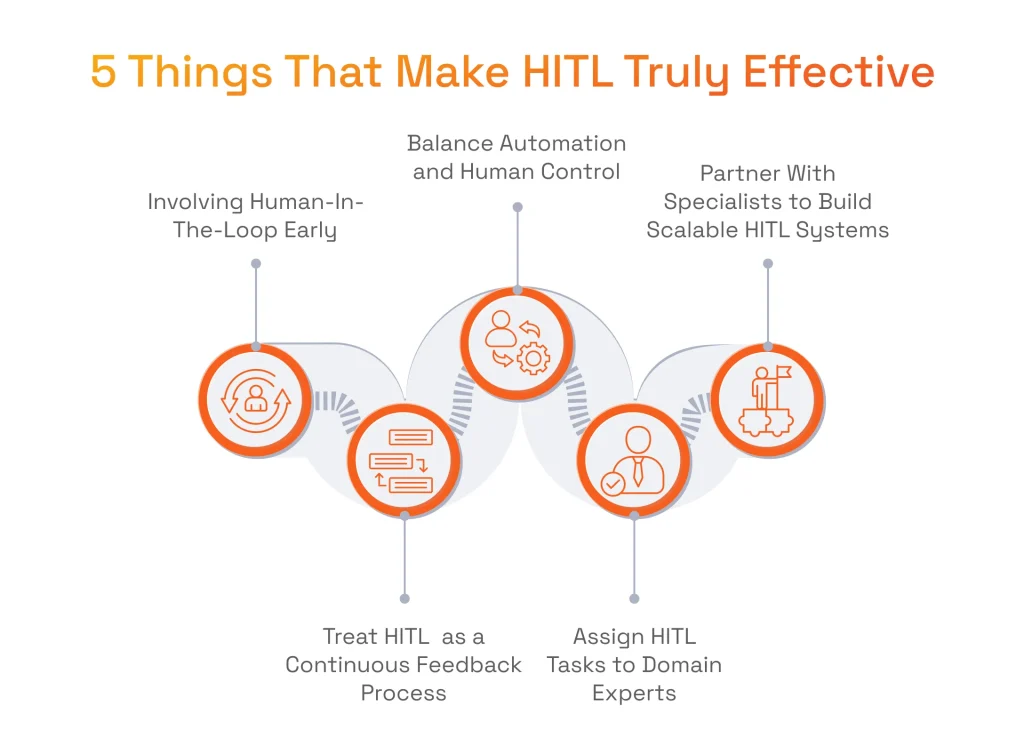

5 Things That Make Human-in-the-Loop (HITL) Truly Effective

Human-in-the-loop systems aren’t automatically effective just because a human is involved. Like any system, they must be thoughtfully designed, strategically timed, and continuously improved. Here’s what determines how effective human-in-the-loop AI systems turn out to be.

Incorporating HITL Early — Not After Mistakes Happen

The most successful human-in-the-loop implementations integrate human oversight from the beginning of AI development rather than retrofitting it after problems occur. By incorporating human judgment during initial data selection and labeling, organizations establish a strong foundation for their AI systems.

This proactive approach prevents the propagation of biases and errors that might otherwise become embedded in the system. Early integration of human-in-the-loop also helps shape the AI architecture to accommodate human intervention at appropriate points.

Treating Human-in-the-Loop (HITL) AI as a Continuous Process

Effective human-in-the-loop isn’t a one-time verification step but a continuous feedback loop where human insights constantly improve AI performance. The algorithm becomes more accurate and consistent as humans fine-tune the model’s responses to various cases.

This ongoing collaboration creates an environment where the system becomes increasingly sophisticated. Organizations should establish transparent processes for capturing human feedback, incorporating it into model retraining, and measuring the resulting improvements.

Synergy Human in the Loop Approach: Leveraging External Expertise

Many organizations require external expertise to build effective human-in-the-loop systems, especially when scaling beyond initial pilots. Partnerships with specialized tech vendors providing AI development services can accelerate implementation, avoid common pitfalls, and incorporate best practices across industries.

Serhii Leleko

ML & AI Engineer at SPD Technology

“While new product development companies may lack the established track record and in-depth domain expertise, seasoned ones have refined processes, proven methodologies, and a portfolio of successful launches that foster confidence in the product’s successful launch.”

Striking the Right Balance Between Automation and Human Oversight

Finding the optimal division of labor between humans and AI is crucial. Too much human intervention creates bottlenecks and limits scalability; too little risks errors and missed context.

The best implementations automate routine tasks while reserving human judgment for complex or high-stakes decisions. This balance should evolve as AI capabilities mature and confidence in specific functions increases. Assessing where human intervention adds the most value helps organizations optimize resource allocation.
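
A simple way to reason about this balance is a routing rule that sends routine, high-confidence cases to automation and everything else to a person. The sketch below is illustrative only: the `route` function, the 0.9 confidence threshold, and the sample cases are assumptions, and the right threshold depends on the domain and its risk tolerance.

```python
def route(confidence: float, high_stakes: bool, threshold: float = 0.9) -> str:
    """Return 'auto' for routine, confident cases and 'human' otherwise."""
    if high_stakes or confidence < threshold:
        return "human"
    return "auto"


# Hypothetical cases as (model confidence, is the decision high-stakes?).
cases = [
    (0.98, False),  # routine and confident
    (0.95, True),   # confident, but high-stakes
    (0.72, False),  # routine, but the model is unsure
    (0.91, False),  # routine and confident
]

decisions = [route(confidence, high_stakes) for confidence, high_stakes in cases]
automation_rate = decisions.count("auto") / len(decisions)
print(decisions, automation_rate)
```

Tracking the automation rate over time gives a rough signal of where the balance sits. As confidence in a specific function grows, the threshold can be lowered for that function while high-stakes cases keep going to a reviewer.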

Involving the Right Experts — Not Just Any Human in the Loop

The value of human-in-the-loop depends heavily on the quality of human judgment involved. Subject-matter experts who understand the domain and the AI’s capabilities provide more valuable guidance than general reviewers. Many organizations lack in-house expertise to effectively supervise AI systems, making it worthwhile to consider partnering with a tech vendor. Collaborating with external experts can help bridge the gap between technical capabilities and domain-specific needs and deliver the strongest results.

Conclusion

HITL AI exists because the reality is clear: not all tasks can or should be fully automated. In many contexts, the optimal solution combines AI’s computational power and scalability with human experts’ judgment, creativity, and ethical reasoning.

Custom AI solutions allow companies to get the most out of this system design approach, so it’s essential to partner with tech vendors that have in-depth expertise in your business domain. At SPD Technology, we help clients identify the most practical, scalable, and cost-effective way to integrate human insight into their AI projects. If you need a hand, contact us, and we will apply our expertise to your specific needs.

FAQ

What are the limitations of HITL?

Human-in-the-Loop systems can be costly and time-consuming due to the need for continuous human involvement. They face scalability challenges as increasing data volume and task complexity demand more human resources, which can become bottlenecks. Additionally, human judgment is subject to biases and errors, which may affect AI outcomes and system stability.

How is HITL different from full automation?

In HITL, an AI system integrates human input at critical points to guide, correct, or validate AI decisions, ensuring higher accuracy and ethical oversight. In contrast, full automation (human-out-of-the-loop) operates independently without human intervention during execution, prioritizing speed and scale but potentially lacking adaptability and accountability.

Can HITL be used in real-time systems?

Yes, HITL can be applied in real-time systems, but it requires carefully designed workflows to balance human review with system responsiveness. For time-sensitive decisions, humans may oversee AI outputs and intervene only when necessary, ensuring safety without causing unacceptable delays.