Data is omnipresent, and its volume keeps growing rapidly. According to Statista, the total amount of data created worldwide is anticipated to grow from 64.2 zettabytes in 2020 to over 180 zettabytes by 2025. This growth drives demand for storage capacity, which is also expected to grow at a CAGR of 19.2% over the same period.

In the search for optimal storage solutions, businesses often end up comparing data warehouses and data lakes. To understand which one suits specific business needs, it is essential to explore the distinctions between these two systems, how they compare to other storage solutions, and their use cases, benefits, and risks. This article covers all these aspects in detail.

What is a Data Lake?

Understanding the difference between a data lake and a data warehouse starts with defining the nature and characteristics of each.

First, let’s answer the question “What is a data lake?” It is a centralized storage repository that stores large amounts of raw data. It is capable of handling different types of data, including structured, semi-structured, and unstructured data. This is why a business can rely on data lakes to store text, images, videos, log files, and any other information.

Here are the defining characteristics of a data lake:

- Raw Data Storage: Data lakes support many data formats and can accept information from different sources without transforming it first.

- Schema-on-Read: Data is ingested as-is, and a schema is applied only when the data is read, so nothing has to be processed or reorganized before storage.

- Optimized for Scalability: Data lakes can scale to petabytes of data, and a cloud data lake can be used to avoid capacity constraints.
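To make schema-on-read concrete, here is a minimal Python sketch. It assumes raw events are kept as JSON Lines text exactly as they arrived; the field names, the `read_with_schema` helper, and the two schemas are hypothetical. Each consumer supplies its own schema at read time, so the same raw records can be read in different ways:

```python
import json

# Raw records land in the lake as-is: mixed shapes, no upfront schema (hypothetical data).
RAW_EVENTS = """\
{"user": "u1", "event": "click", "ts": "2024-05-01T10:00:00"}
{"user": "u2", "event": "purchase", "amount": "19.99", "ts": "2024-05-01T10:05:00"}
{"user": "u3", "event": "click", "device": {"os": "android"}}
"""

def read_with_schema(raw: str, schema: dict) -> list[dict]:
    """Apply a schema at read time: keep only the listed fields and cast them.

    Missing fields become None instead of failing the read, because the
    stored data was never forced to match this (or any) schema.
    """
    rows = []
    for line in raw.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        rows.append({
            name: (cast(record[name]) if name in record else None)
            for name, cast in schema.items()
        })
    return rows

# Two consumers, two schemas, same raw data.
purchases = [
    r for r in read_with_schema(RAW_EVENTS, {"user": str, "event": str, "amount": float})
    if r["event"] == "purchase"
]
activity = read_with_schema(RAW_EVENTS, {"user": str, "ts": str})

print(purchases)    # [{'user': 'u2', 'event': 'purchase', 'amount': 19.99}]
print(activity[2])  # {'user': 'u3', 'ts': None}
```

Because nothing was rejected at ingestion, the incomplete third record is still available; the trade-off is that every reader has to cope with missing or mistyped fields itself.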

What is a Data Warehouse?

By contrast, a data warehouse is a centralized repository for storing structured data only. It accepts data from diverse sources, including CRM, ERP, POS, eCommerce platforms, and operational systems. A warehouse promotes a structured approach to data storage that contributes to seamless reporting, business intelligence (BI), and data analytics functionalities.

Here is what defines a data warehouse:

- Structured Data Storage: Even though the data in warehouses is derived from different sources, it is consistent thanks to a structured approach.

- Schema-on-Write: The schema is defined before data is loaded, so information coming from sources in different formats must be cleaned, transformed, and organized to fit it before it enters the warehouse.

- Optimized for Query Performance: Data warehouses are designed for fast querying and retrieval of data and often include features like indexing and materialized views.
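For contrast, schema-on-write can be sketched with Python's built-in `sqlite3` module standing in for a warehouse. The table, the cleaning rules in `transform`, and the sample records are hypothetical. Records are cleaned to fit the predefined schema, and anything that still violates it is rejected before it is stored:

```python
import sqlite3

# Schema-on-write: the table structure and its rules exist before any data arrives.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE sales (
        order_id  INTEGER PRIMARY KEY,
        region    TEXT NOT NULL CHECK (region IN ('EU', 'US', 'APAC')),
        amount    REAL NOT NULL CHECK (amount >= 0),
        order_day TEXT NOT NULL            -- ISO date, e.g. '2024-05-01'
    )
""")

def transform(raw: dict) -> tuple:
    """Clean a source record so it fits the warehouse schema (hypothetical rules)."""
    return (
        int(raw["id"]),
        raw["region"].strip().upper(),       # ' eu ' -> 'EU'
        round(float(raw["amount"]), 2),      # '19.999' -> 20.0
        raw["date"][:10],                    # keep only the date part
    )

def load(records: list[dict]) -> list[dict]:
    """Write records that survive transformation and constraints; return the rejects."""
    rejected = []
    for raw in records:
        try:
            with conn:  # commits on success, rolls back if the insert raises
                conn.execute("INSERT INTO sales VALUES (?, ?, ?, ?)", transform(raw))
        except (KeyError, ValueError, sqlite3.IntegrityError):
            rejected.append(raw)
    return rejected

rejects = load([
    {"id": "1", "region": " eu ", "amount": "19.999", "date": "2024-05-01T09:30:00"},
    {"id": "2", "region": "LATAM", "amount": "5", "date": "2024-05-01"},  # unknown region
    {"id": "3", "region": "US", "amount": "-3", "date": "2024-05-02"},    # negative amount
])
print(conn.execute("SELECT * FROM sales").fetchall())  # [(1, 'EU', 20.0, '2024-05-01')]
print(len(rejects))  # 2
```

In practice, rejected rows would typically be routed to a quarantine area for review rather than silently dropped.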

Dmytro Tymofiiev

Delivery Manager at SPD Technology

“Data lakes are ideal for healthcare and retail, where handling large volumes of diverse data for ML, Data Science, and Big Data analytics is a cornerstone of operations. Conversely, data warehouses are best suited for finance, telecommunications, and eCommerce, where structured data underpins BI, reporting, and historical analysis.”

Difference Between Data Lake and Data Warehouse

For a clearer illustration, here is the comparison of both storage systems side-by-side:

| Characteristic | Data Lake | Data Warehouse |
| --- | --- | --- |
| Purpose | Storage of raw, unstructured, semi-structured, and structured data. | Structured storage for processed, organized data ready for analysis. |
| Data Structure | Schema-on-read: data stored in its original format, organized upon access. | Schema-on-write: data is cleaned, transformed, and structured before loading. |
| Typical Use Cases | Big Data, advanced analytics, ML, diverse data types. | Operational reporting, KPIs, historical analysis, BI. |
| Flexibility/Performance | Ideal for experimentation, exploration, and complex data types. | Optimized for fast querying and high-performance structured data analysis. |

Data Warehouse vs Data Mart

There are also other solutions for storing data that may be more suitable for specific business objectives. One of them is a data mart, a subset of a data warehouse that focuses on a specific business unit within a company. Whereas data warehouses serve the whole organization, data marts serve the needs of individual departments such as finance, sales, marketing, or human resources, giving them quicker access to the information they need for reporting and analysis.

To better understand the difference between a data warehouse and a data mart, here are the key properties of the latter:

- Department-Specific: While data warehouses hold data from sources across the entire company, data marts contain only the data relevant to a specific department.

- Faster Access: Because a data mart stores a smaller volume of data than a data warehouse, it can typically return the necessary data faster.

- Optimized for Targeted Analytics: Data marts provide focused analytics that address department-specific tasks, while data warehouses are designed for broader analytical needs.
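A data mart is often carved out of the warehouse as a department-specific subset. The minimal sketch below assumes an in-memory SQLite database as a stand-in for the warehouse and implements the mart as a view; the table, column, and view names are hypothetical. Real marts are frequently materialized as separate tables or databases so that the smaller dataset can be queried faster, and a view only illustrates the filtering logic:

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Organization-wide warehouse fact table (hypothetical columns).
conn.execute("CREATE TABLE fact_expenses (department TEXT, category TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO fact_expenses VALUES (?, ?, ?)",
    [
        ("finance", "software", 1200.0),
        ("finance", "travel", 300.0),
        ("marketing", "ads", 5000.0),
        ("hr", "training", 800.0),
    ],
)

# Finance data mart: only finance rows, pre-aggregated for finance reporting.
conn.execute("""
    CREATE VIEW mart_finance AS
    SELECT category, SUM(amount) AS total
    FROM fact_expenses
    WHERE department = 'finance'
    GROUP BY category
""")

print(conn.execute("SELECT * FROM mart_finance ORDER BY category").fetchall())
# [('software', 1200.0), ('travel', 300.0)]
```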

Data Lake vs Data Warehouse vs Database

Another storage solution is a database, a general-purpose system for handling structured data. A database stores information so that applications can easily retrieve and use it for day-to-day operations. Unlike data warehouses or data lakes, databases are designed for online transaction processing (OLTP), as their nature allows for quick access and updates.

To clarify how a database differs from a data warehouse and a data lake, here is a breakdown of its essential features:

- Structured Data Storage: Unlike data lakes, databases store information in organized tables with predefined schemas, which makes them ideal for structured data.

- Optimized for OLTP: While data lakes and warehouses are best suited for online analytical processing (OLAP), databases are built for OLTP, which enables fast insertion, updates, and retrieval of individual records.

- High-Speed Operations: Databases are used for fast operations like instant searching, retrieving, and modifying small amounts of data, while data lakes usually prioritize storage and exploration of information.

- Ensured Data Consistency: Databases adhere to ACID principles (Atomicity, Consistency, Isolation, Durability) to guarantee data integrity. This differs from data lakes, which favor flexibility over consistency.

- Focused Scope on Operational Data: Databases are optimized for managing current, operational data rather than the historical or large-scale analytical datasets typically handled by data warehouses and data lakes.
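Atomicity, the "A" in ACID, is easiest to see in a money transfer. The minimal sketch below assumes Python's built-in `sqlite3` module as a stand-in for a transactional database; the table and account names are hypothetical. The credit is deliberately applied before the debit, so the failed transfer shows the database undoing a change it had already made:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id TEXT PRIMARY KEY, balance REAL NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100.0), ("bob", 50.0)])
conn.commit()

def transfer(src: str, dst: str, amount: float) -> bool:
    """Move money between accounts as one all-or-nothing transaction."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        # CHECK (balance >= 0) failed on the debit, so the credit above was rolled back too.
        return False

print(transfer("alice", "bob", 30.0))   # True
print(transfer("alice", "bob", 500.0))  # False: would overdraw alice
print(dict(conn.execute("SELECT id, balance FROM accounts")))
# {'alice': 70.0, 'bob': 80.0}
```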

What Is a Lakehouse?

A lakehouse is a modern data architecture that combines the strengths of data lakes and data warehouses. Like a data lake, it stores data in different formats (structured, semi-structured, and unstructured), and like a data warehouse, it delivers performance, reliability, and analytics capabilities.

Here are the key characteristics to better answer the question “What is a lakehouse?”:

- Unified Architecture: A lakehouse acts as a single system to manage all types of data and workloads, eliminating the need to move data between lakes and warehouses.

- Schema Flexibility: Like a data lake, it allows schema-on-read for flexibility, but it also incorporates the schema-on-write capabilities typical of data warehouses for structured data.

- Support for BI and AI: It supports both BI for operational reporting and ML/AI workloads for advanced analytics.

Data Lakehouse vs Data Warehouse

Even though a data lakehouse has a lot in common with a data warehouse, it also has distinct capabilities. Their similarities include support for structured data, a schema-on-write approach to storage, and a focus on analytics and reporting. Moreover, both systems emphasize data governance, security, and reliability to keep information consistent.

The main difference between a data lakehouse and a data warehouse is that a lakehouse goes beyond a warehouse: it handles a broader range of data types and supports AI/ML development, Big Data processing, and advanced analytics.

Data Lake vs Data Lakehouse

Just like with warehouses, lakehouses share both similarities and distinctions with data lakes. Both storage repositories handle different data formats and promote the storage of diverse datasets in a single place. Also, they both support the schema-on-read approach.

However, data lakehouses go even further and add schema-on-write, ACID transactions, and query optimization. In this way, a lakehouse combines the performance and reliability of a warehouse with the flexibility of a lake.

Data Warehouse vs Data Lake vs Data Lakehouse

Despite its seemingly winning concept, a data lakehouse isn’t a one-size-fits-all solution. Its effectiveness depends on the organization’s data needs, existing infrastructure, and goals. Because it combines the strengths of lakes and warehouses, a lakehouse is also more complex to implement. Plus, for some projects, choosing a lakehouse can be overkill.

For example, a lakehouse wouldn’t be the best choice for the companies that require immediate processing and response times, those operating in highly regulated industries (like healthcare or finance) or those which have established infrastructure (since implementing a lakehouse might require significant changes and resource investment).

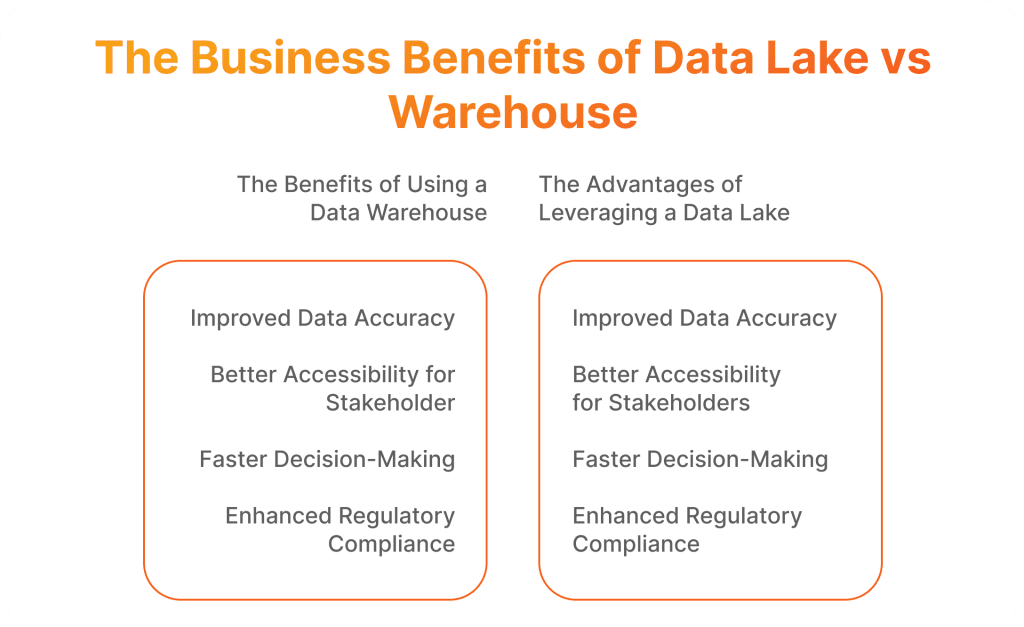

The Business Benefits of Data Lake vs Warehouse

Having robust and scalable data storage in place contributes to a solid, trusted data foundation, which, according to Accenture, 84% of companies currently lack. The remaining 16% are more likely to use data lakes and data warehouses and, thus, to benefit from what they offer.

The Benefits of Using a Data Warehouse

As data warehouses require data cleansing and validation before storage, the information inside is generally high-quality and accurate. Moreover, this data is well organized, as data warehouses are centralized, structured environments. Thanks to this streamlined nature, the company’s stakeholders get fast access to critical and relevant information and can rely on the insights they derive from it. As a result, the organization’s decisions are driven by data.

All the properties of a data warehouse mentioned above, together with its built-in tools for tracking data lineage and audit trails, help with regulatory compliance. They contribute to data transparency and accountability and ensure that data is accurately tracked, changes are documented, and access is monitored, which is essential for meeting regulatory requirements.


The Advantages of Leveraging a Data Lake

Because data lakes handle various data types and structures, it is much easier for companies to adapt when new data requirements arise. Unlike data warehouses, which often require considerable reconfiguration and schema adjustments to manage changes, data lakes don’t require predefined structures, so they integrate new data sources easily. Furthermore, they can be scaled horizontally, which lets them handle as much data as the company needs while keeping the system performant.

Thanks to this versatility and flexibility, data lakes facilitate advanced analytics and machine learning, which make it possible to analyze patterns and trends in data. This makes it much easier to adjust to market conditions, demand, and changing customer preferences, resulting in better market responsiveness and a stronger competitive advantage.

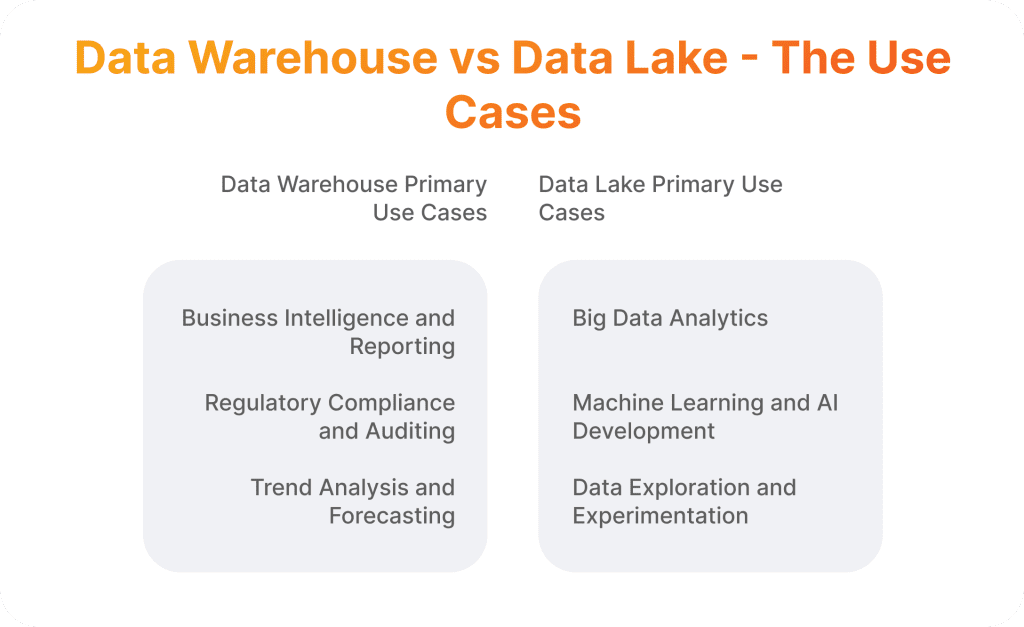

Data Warehouse vs Data Lake: Use Cases

The right choice between a data lake and a data warehouse depends directly on the use case the company needs to implement. Let’s compare the use cases of each.

Data Warehouse Primary Use Cases

The use of data warehouses is usually driven by defensive data management strategies. They offer clean, structured, and trusted data that supports data quality, regulatory compliance, and security measures.

Business Intelligence and Reporting

Thanks to the consolidation of organized data from different sources, data warehouses allow companies to create dashboards and reports that provide accurate and reliable information. This is especially advantageous for large companies, where an enterprise data warehouse plays a crucial role in detecting trends, evaluating performance, and analyzing different metrics, including KPIs.

In this way, enterprises get a big-picture view of the company’s productivity and capabilities, presented in reports or visual analytics. For example, financial companies can use it to review transactional data, and sales and marketing departments can use it to analyze campaign results.

Regulatory Compliance and Auditing

Data warehouses help companies comply with legal requirements such as GDPR or PCI DSS because they keep data consistent and easy to trace. This is possible thanks to data lineage and audit trails, which make it straightforward to verify who accesses the data, what changes are made to it, and when. Such a level of accountability is a must for avoiding risks during regulatory reviews in highly regulated fields such as finance. In addition, since warehouses store historical data, critical records can be provided to regulators in response to legal requests.

Trend Analysis and Forecasting

Because they store historical data over long periods, data warehouses support predictive analytics. Past information contains patterns and trends that can be uncovered and used for forecasting future outcomes and strategic planning. This is especially helpful for retail, as companies can anticipate future inventory requirements. It also benefits marketing in any industry, where promo campaigns can be planned based on demand or seasonality.


Data Lake Primary Use Cases

The need for a data lake typically arises when a company follows an offensive data management strategy. Working with large volumes of diverse data from multiple sources, with flexibility and the ability to scale, enables insights and innovation through predictive modeling and ML.

Big Data Analytics

Data lakes are well known for their ability to handle large volumes of heterogeneous data. They can store information from IoT devices, social media, or logs, giving healthcare, eCommerce, and manufacturing companies the opportunity to analyze it. Because data lakes impose no restrictions on data type or format, the analysis can draw on all available data and adapt as new information arrives.

Another advantage is that data lakes work smoothly with tools such as Apache Spark and Hadoop, which enable advanced analytics, including real-time data processing, predictive modeling, and ML, within the data lake environment. This flexibility and analytical power amplify the business impact of Big Data and drive innovation across industries.

Machine Learning and AI Development

The versatility and abundance of raw data stored in data lakes make it possible for companies to train ML models on text files, images, videos, and more. Businesses can experiment with ML models and try different algorithms to fine-tune AI systems to specific needs and objectives.

For example, healthcare companies can train ML models on data from IoT sensors and medical histories to detect health issues and fine-tune medical recommendation functionality. Our team delivered such functionality for our B2C healthcare client, achieving 90% accuracy in identifying facial imperfections. This use case also suits retail companies, which can build precise recommendation algorithms by feeding information about past purchases, demand, and customer feedback into data lakes and, from there, into ML models.

Data Exploration and Experimentation

Data lakes encourage data exploration by providing a centralized repository for all types of data without imposing predefined schemas. This makes it possible to explore raw datasets to identify trends, correlations, or anomalies. On top of that, data lakes provide access to historical raw data, which enables analysis of past patterns and uncovers hidden insights in them. These two features can be useful for BioTech businesses experimenting with lab data and searching for new formulas.

Data Warehouse vs Data Lake: When to Choose Each of the Solutions?

The difference between a data warehouse and a data lake becomes more evident when a company starts evaluating implementation scenarios. Below are some typical cases when it is more appropriate to use one or the other.

Choose a Data Warehouse When:

- The organization has stable data model needs: Thanks to the structured architecture of a data warehouse, your system will have consistent and reliable storage that is also optimized for fast querying.

- The company operates in a highly regulated industry: Warehouses make it easier to comply with regulations and maintain data governance since they offer auditing, security, and governance capabilities.

- The business relies on consistent reporting and analytics: Warehouses are known for providing clean and standardized data, which fuels visualization, reporting, and KPI tracking.

- The organization requires data integration from multiple sources: A data warehouse acts as a central hub for information, so it is naturally suited to consolidating data from CRM, ERP, and other applications or platforms.

- The organization regularly runs complex queries across large datasets: Warehouses are designed for fast response times on complex queries, so they can handle aggregations, joins, or filters across extensive datasets.

- The business needs long-term data storage for compliance and analysis: Because it manages and retrieves historical data effectively, a data warehouse supports long-term trend forecasting, auditing, and compliance reporting.

Choose a Data Lake When:

- The organization deals with diverse data types: Businesses that generate and analyze transactional records, IoT sensor streams, images, or videos can benefit from the data lake’s capability to integrate diverse data sources without extensive preprocessing.

- The company requires scalability for large volumes of data: For organizations expecting rapid data growth, a data lake can easily expand to accommodate petabytes of data without the need for costly infrastructure upgrades.

- The organization needs to conduct advanced analytics and data exploration: Data lakes enable swift access to raw data for predictive modeling and ML, which allows uncovering new patterns that traditional systems often overlook.

- The company seeks to store data for long-term analysis without immediate transformation: Since data lakes do not require changing data format for storage, historical data stored in them remains accessible over time, which can be beneficial in case of compliance checks.

- The organization aims to leverage big data technologies: Businesses implementing Big Data analytics workflows (for example, for fraud detection or personalized recommendations) rely on data lakes for ingesting, processing, and analyzing large datasets.

- The company wants to enhance real-time data processing capabilities: Organizations can act immediately on time-sensitive insights as data lakes allow for ingesting streaming data and performing analytics on the fly.

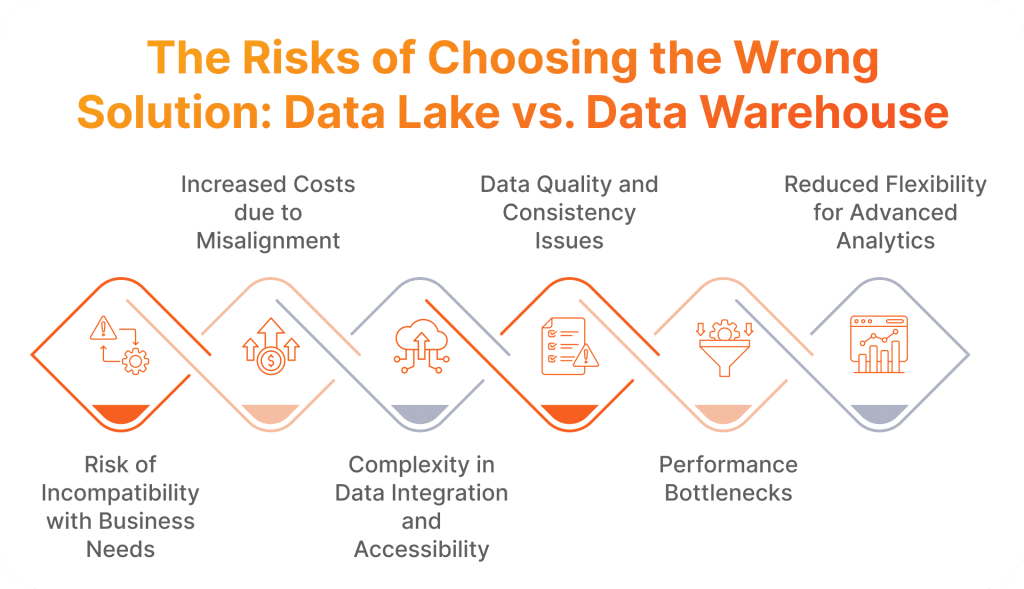

The Risks of Making the Wrong Choice Between a Data Lake and a Data Warehouse and How We Mitigate Them

A careful approach to selecting data storage helps avoid multiple risks, saving time and money. We have solved many of these challenges in projects for our clients, and below we share how to mitigate them.

Risk of Incompatibility with Business Needs

Choosing a data lake where a data warehouse is more suitable, or vice versa, can stall the project. For example, if you choose a data lake for transactional analytics, you may face complex data retrieval and slow query performance. Conversely, forcing a data warehouse to perform exploratory or experimental tasks with unstructured data may restrict flexibility.

To avoid such a misalignment between system capabilities and business objectives, we run in-depth requirements analysis workshops together with our customers. Our team brings together business stakeholders from operations, sales, marketing, and other departments to align on data types, user needs, and analytics objectives before opting for either a data lake or a data warehouse.

Increased Costs due to Misalignment

If a business chooses a data warehouse without genuinely needing its structured nature, or a data lake without needing to handle raw data, much of the infrastructure remains unused. That means you pay for what you do not benefit from. As McKinsey indicates, managing and maintaining disjointed and fragmented repositories can consume 15 to 20% of a typical IT budget.

Before starting the project, our team conducts a cost-benefit analysis focused on data growth trends, storage capacity requirements, and compute power demands. This allows us to identify the most cost-effective architectures for data, which will address your unique needs. Moreover, in this manner we ensure that the solution will be capable of scaling once your organization grows.

Complexity in Data Integration and Accessibility

The importance of data integration cannot be overstated when choosing a storage solution: the wrong choice can cause data silos and accessibility challenges that slow down not only decision-making but everyday operations as well. For example, complicated integration of new data sources into a data warehouse may limit access to critical data, while deploying a data lake without robust governance mechanisms can result in a disorganized repository.

To make integrations seamless, we assess the current data setup, identify integration points, and find potential complexities. Then, using real-time data integration frameworks, our team builds customized solutions, such as middleware or APIs, to keep data flowing across departments as smoothly as possible.

Data Quality and Consistency Issues

The way data is stored, managed, and accessed affects its accuracy, consistency, and usability. Data lakes can turn into “data swamps” if governance is weak, while data warehouses force data into rigid formats, which can lead to the loss of information that does not fit the schema. Fixing either problem requires significant reinvestment. According to Gartner, poor data quality costs organizations an average of around $15 million per year.

From the very start of the project, our data team employs strict governance procedures and data quality management. We maintain high data integrity in both data lakes and data warehouses thanks to the use of automated data cleaning and validation procedures, metadata management, and user access controls.

Performance Bottlenecks

When companies use data lakes for frequent and complex queries, they may face inefficient data retrieval, inconsistent query results, or other performance issues. The same applies to data warehouses: when businesses use them for large-scale data processing or mixed workloads with different data formats, query response times can slow down and resources can be used inefficiently.

To address these challenges, our technical teams perform an analysis of anticipated data volumes, query complexity, and workload patterns to implement relevant optimization strategies. For data warehouses, we employ indexing, partitioning, and materialized views to improve query speed and reduce processing overhead. In the case of data lakes, we leverage caching mechanisms and distributed processing frameworks.
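Partitioning, mentioned above for warehouses, applies equally to data lakes, where files are commonly laid out in Hive-style folders such as `event_date=2024-05-01/`. The minimal sketch below assumes CSV files on local disk in place of a real object store and query engine; the folder layout, function names, and sample rows are hypothetical. A filter on the partition key lets the reader skip every folder that cannot contain matching rows:

```python
import csv
import tempfile
from pathlib import Path

def write_partitioned(root: Path, rows: list[dict]) -> None:
    """Write rows into one folder per day, e.g. root/event_date=2024-05-01/part.csv."""
    by_day: dict[str, list[dict]] = {}
    for row in rows:
        by_day.setdefault(row["event_date"], []).append(row)
    for day, group in by_day.items():
        folder = root / f"event_date={day}"
        folder.mkdir(parents=True, exist_ok=True)
        with open(folder / "part.csv", "w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=["event_date", "amount"])
            writer.writeheader()
            writer.writerows(group)

def total_for_days(root: Path, days: set[str]) -> tuple[float, int]:
    """Sum 'amount' for the given days, opening only the matching partitions."""
    total, files_read = 0.0, 0
    for folder in sorted(root.glob("event_date=*")):
        if folder.name.split("=", 1)[1] not in days:
            continue  # partition pruning: this folder cannot contain matching rows
        with open(folder / "part.csv", newline="") as f:
            files_read += 1
            total += sum(float(r["amount"]) for r in csv.DictReader(f))
    return total, files_read

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    write_partitioned(root, [
        {"event_date": "2024-05-01", "amount": 10.0},
        {"event_date": "2024-05-01", "amount": 5.0},
        {"event_date": "2024-05-02", "amount": 7.5},
        {"event_date": "2024-05-03", "amount": 2.5},
    ])
    result = total_for_days(root, {"2024-05-01"})

print(result)  # (15.0, 1): only one of the three partitions was read
```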

Reduced Flexibility for Advanced Analytics

An organization’s ability to carry out sophisticated analytics may be severely restricted by its choice of data architecture. For instance, data warehouses are not designed to manage text, photos, and IoT sensor streams at once, while data lakes are not optimized for high-speed querying. Forcing these systems to do what they were not designed for leads to inconsistent results and delayed decisions.

When companies need the capabilities of both systems, we usually recommend a hybrid solution, particularly a lakehouse. Its architecture combines the scalability and flexibility of a data lake with the speed and reliability of a data warehouse.

Dmytro Tymofiiev

Delivery Manager at SPD Technology

“Data warehouses are perfect for fast analytics, while data lakes are useful for advanced analytics and ML. Nevertheless, they can also be used together. In that case, they complement each other in the hybrid solution called a lakehouse, addressing both operational and exploratory needs.”

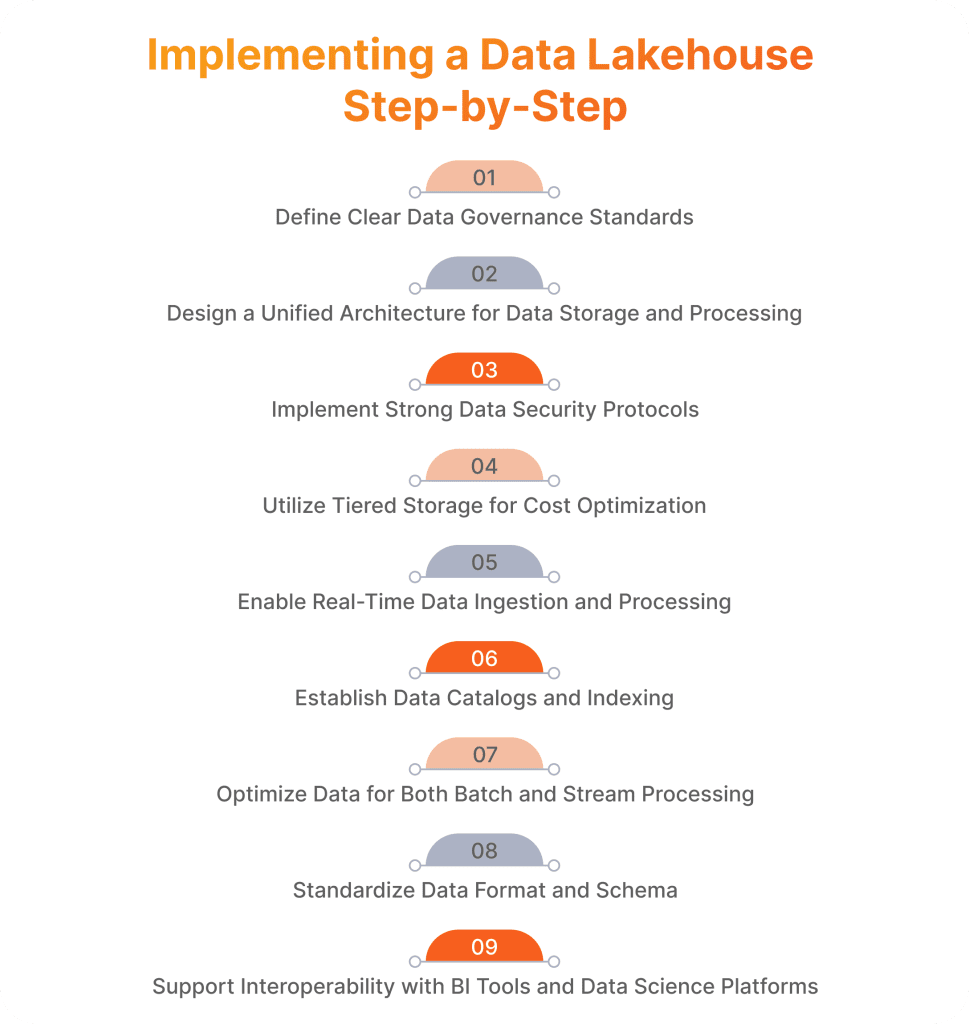

Combining Both: The Data Lakehouse Approach and Practices We Follow to Implement It Effectively

In the comparison of a data warehouse vs data lake vs data lakehouse, the lakehouse may seem like the clear winner, as it combines the best of the other two solutions. However, it should still be chosen strategically. Once you are sure this solution is the right fit for your organization, you need to implement it correctly, following the proven practices we share below.

1. Define Clear Data Governance Standards

A lakehouse governed by clear data governance principles keeps stored data accurate, consistent, and accessible. To keep data well organized and compliant across raw and structured layers, we design custom governance frameworks that include:

- Metadata management;

- Access controls;

- Quality checks.
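Quality checks are the easiest of these to illustrate. The minimal sketch below assumes records arrive as Python dicts; the rules (required fields, a unique key, a 5% missing-value threshold), function names, and sample data are hypothetical examples rather than a fixed standard:

```python
from collections import Counter

def quality_report(rows: list[dict], required: list[str], key: str) -> dict:
    """Run basic rule-based checks on a batch before it is promoted to a curated layer."""
    total = len(rows)
    missing_ratio = {
        name: sum(1 for r in rows if r.get(name) in (None, "")) / max(total, 1)
        for name in required
    }
    key_counts = Counter(r.get(key) for r in rows)
    duplicate_keys = sorted((k for k, n in key_counts.items() if n > 1), key=str)
    return {"rows": total, "missing_ratio": missing_ratio, "duplicate_keys": duplicate_keys}

def passes(report: dict, max_missing: float = 0.05) -> bool:
    """Gate: no duplicate keys and every required field within the missing-value threshold."""
    return not report["duplicate_keys"] and all(
        ratio <= max_missing for ratio in report["missing_ratio"].values()
    )

batch = [
    {"customer_id": "c1", "email": "a@example.com", "country": "DE"},
    {"customer_id": "c2", "email": "", "country": "US"},
    {"customer_id": "c2", "email": "b@example.com", "country": None},
    {"customer_id": "c3", "email": "c@example.com", "country": "FR"},
]
report = quality_report(batch, required=["email", "country"], key="customer_id")
print(report["missing_ratio"])   # {'email': 0.25, 'country': 0.25}
print(report["duplicate_keys"])  # ['c2']
print(passes(report))            # False
```

A batch that fails the gate would typically be held back, with the report attached, instead of being merged into trusted tables.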

2. Design a Unified Architecture for Data Storage and Processing

We bridge the gap between data lakes and data warehouses by making the system handle both structured and unstructured data. For that, we design a unified architecture that can be cloud-native, to benefit from cloud computing infrastructure, or hybrid, to preserve the advantages of on-premises systems. To set up the lakehouse architecture, we focus on:

- Developing robust ETL processes;

- Automating data transformation;

- Implementing unified query engines;

- Using metadata catalogs.

3. Implement Strong Data Security Protocols

We protect customer information from breaches and leaks by implementing security protocols. This not only gives system users peace of mind but also ensures compliance with data regulations such as GDPR or HIPAA. For that, our team pays particular attention to:

- Multi-level encryption;

- User access management;

- Audit trails.
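Audit trails can be made tamper-evident by chaining entries with cryptographic hashes, a common technique, though not necessarily the one any particular platform uses. The minimal sketch below assumes an in-memory list in place of durable, append-only storage; the field names, function names, and sample events are hypothetical:

```python
import hashlib
import json
from datetime import datetime, timezone

def append_event(log: list[dict], user: str, action: str, resource: str) -> dict:
    """Append an audit entry whose hash covers its content and the previous entry's hash."""
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "resource": resource,
        "prev_hash": log[-1]["hash"] if log else "0" * 64,
    }
    payload = json.dumps(entry, sort_keys=True).encode()
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(entry)
    return entry

def verify(log: list[dict]) -> bool:
    """Recompute every hash; editing or deleting an earlier entry breaks the chain."""
    prev = "0" * 64
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev:
            return False
        if hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest() != entry["hash"]:
            return False
        prev = entry["hash"]
    return True

audit_log: list[dict] = []
append_event(audit_log, "analyst_1", "SELECT", "sales.orders")
append_event(audit_log, "admin_2", "UPDATE", "hr.salaries")
print(verify(audit_log))  # True

audit_log[0]["user"] = "someone_else"  # tampering with history...
print(verify(audit_log))  # False
```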

4. Utilize Tiered Storage for Cost Optimization

We balance performance and cost-efficiency by organizing data across different storage tiers based on its usage patterns. We divide data into hot, warm, and cold tiers depending on how frequently it is used. This allows rapid access to frequently used data, while rarely used data is stored cost-effectively in cloud object storage or tape archives. To achieve this, we set up:

- Data categorization;

- Optimized deployment;

- Automated tiering.
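Automated tiering usually comes down to a rule that maps access recency to a tier. The minimal sketch below assumes last-access dates are available from storage metadata; the 30- and 180-day thresholds and the dataset names are hypothetical, and a real setup would typically rely on the storage platform's own lifecycle policies to actually move the data:

```python
from datetime import date, timedelta

# Hypothetical thresholds: tune them to real access patterns and storage prices.
HOT_DAYS = 30
WARM_DAYS = 180

def assign_tier(last_accessed: date, today: date) -> str:
    """Map a dataset to a storage tier by how recently it was read."""
    age = (today - last_accessed).days
    if age <= HOT_DAYS:
        return "hot"    # fast storage for frequently queried data
    if age <= WARM_DAYS:
        return "warm"   # standard object storage
    return "cold"       # archival object storage or tape

today = date(2024, 6, 1)
datasets = {
    "orders_current_month": today - timedelta(days=2),
    "orders_last_quarter": today - timedelta(days=90),
    "orders_2019": today - timedelta(days=1500),
}
plan = {name: assign_tier(last, today) for name, last in datasets.items()}
print(plan)
# {'orders_current_month': 'hot', 'orders_last_quarter': 'warm', 'orders_2019': 'cold'}
```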

5. Enable Real-Time Data Ingestion and Processing

A lakehouse must support up-to-the-minute analytics, so we implement real-time data ingestion for it. This helps businesses support use cases such as fraud detection, real-time inventory optimization, or live customer monitoring. To accomplish this, we:

- Build scalable pipelines;

- Employ real-time ETL processes;

- Integrate ingestion with lakehouse systems;

- Make systems support real-time analytics.

6. Establish Data Catalogs and Indexing

Data in a lakehouse must be easy to locate, understand, and access. Therefore, we create a searchable metadata repository that describes datasets, their sources, and their relationships. This is achieved by implementing:

- Automated data catalogs;

- Searchable indexing;

- Data documentation;

- Access controls and permissions.
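A catalog with searchable indexing and permission-aware results can be sketched in a few lines. The minimal in-memory sketch below assumes simple keyword matching on descriptions and tags; the class, field, dataset, and role names are hypothetical, and production lakehouses typically use a dedicated catalog service instead:

```python
from dataclasses import dataclass, field

@dataclass
class DatasetEntry:
    """One catalog record: what a dataset is, where it comes from, and who may see it."""
    name: str
    description: str
    source: str
    owner: str
    tags: set[str] = field(default_factory=set)
    readers: set[str] = field(default_factory=set)  # roles allowed to see the entry

class Catalog:
    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}
        self._index: dict[str, set[str]] = {}  # keyword -> dataset names

    def register(self, entry: DatasetEntry) -> None:
        """Store the entry and index every word of its description and tags."""
        self._entries[entry.name] = entry
        words = entry.description.lower().split() + [t.lower() for t in entry.tags]
        for word in words:
            self._index.setdefault(word, set()).add(entry.name)

    def search(self, keyword: str, role: str) -> list[str]:
        """Return names of datasets matching the keyword that this role may see."""
        names = self._index.get(keyword.lower(), set())
        return sorted(n for n in names if role in self._entries[n].readers)

catalog = Catalog()
catalog.register(DatasetEntry("sales.orders", "daily order lines from the web shop",
                              "eCommerce platform", "sales-data-team",
                              tags={"sales", "orders"}, readers={"analyst", "finance"}))
catalog.register(DatasetEntry("hr.salaries", "monthly salary payments",
                              "HR system", "hr-data-team",
                              tags={"hr", "payroll"}, readers={"hr"}))
print(catalog.search("orders", role="analyst"))   # ['sales.orders']
print(catalog.search("payroll", role="analyst"))  # []
```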

7. Optimize Data for Both Batch and Stream Processing

To make a lakehouse work with both historical and real-time data, our team prioritizes support for batch and stream processing. This gives the system flexibility in data analytics, so it can analyze both long-term trends and patterns and new information as it arrives. We make this possible with:

- Seamless integration of batch and stream tools;

- Customizable workflows;

- Real-time and historical data synergy (with tools like Delta Lake or Apache Hudi).
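The core idea is to write transformation logic once and run it over both historical and live data. The minimal pure-Python sketch below assumes micro-batching as the streaming model (the default model in Spark Structured Streaming); the function names and the currency-conversion rule are hypothetical. Table formats such as Delta Lake or Apache Hudi add the storage layer that lets both paths write to the same tables, which this sketch does not model:

```python
from collections.abc import Iterable, Iterator

def enrich(event: dict) -> dict:
    """Shared business logic, written once for both batch and streaming paths."""
    return {**event, "amount_eur": round(event["amount"] * event["fx_rate"], 2)}

def run_batch(history: list[dict]) -> list[dict]:
    """Batch path: process a complete historical dataset in one pass."""
    return [enrich(e) for e in history]

def run_stream(events: Iterable[dict], batch_size: int = 2) -> Iterator[list[dict]]:
    """Streaming path: process events in small micro-batches as they arrive."""
    buffer: list[dict] = []
    for event in events:
        buffer.append(enrich(event))
        if len(buffer) == batch_size:
            yield buffer
            buffer = []
    if buffer:
        yield buffer  # flush the final, partially filled micro-batch

history = [{"id": 1, "amount": 10.0, "fx_rate": 0.9}, {"id": 2, "amount": 20.0, "fx_rate": 0.9}]
live = iter([
    {"id": 3, "amount": 5.0, "fx_rate": 0.92},
    {"id": 4, "amount": 8.0, "fx_rate": 0.92},
    {"id": 5, "amount": 1.0, "fx_rate": 0.92},
])

print(run_batch(history)[0]["amount_eur"])  # 9.0
for micro_batch in run_stream(live):
    print([e["id"] for e in micro_batch])   # [3, 4] then [5]
```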

8. Standardize Data Format and Schema

Standardizing data formats and schemas is crucial to guarantee that data from many sources is compatible with analytics tools and can be incorporated into a single system. We prioritize designing a consistent schema that removes inconsistencies, simplifies data intake, and speeds up processing. We do this by:

- Combining schema-on-read and schema-on-write practices;

- Metadata management.
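Standardization typically means mapping each source's field names, types, and formats onto one canonical schema. The minimal sketch below assumes two hypothetical sources (a CRM export and an eCommerce feed) with made-up field names and formats; in a lakehouse, the same mapping would usually run inside the ingestion pipeline:

```python
from datetime import datetime

# Canonical schema every source is mapped onto (hypothetical).
CANONICAL_FIELDS = ("customer_id", "order_total", "order_date")

# Per-source mapping: canonical field -> (source field, converter).
SOURCE_MAPPINGS = {
    "crm": {
        "customer_id": ("CustomerID", str),
        "order_total": ("Total", float),
        "order_date": ("Date", lambda s: datetime.strptime(s, "%d/%m/%Y").date().isoformat()),
    },
    "ecommerce": {
        "customer_id": ("user_id", lambda v: f"C{v}"),
        "order_total": ("amount_cents", lambda c: c / 100),
        "order_date": ("created_at", lambda s: s[:10]),
    },
}

def standardize(source: str, record: dict) -> dict:
    """Rename and convert one source record into the canonical schema."""
    mapping = SOURCE_MAPPINGS[source]
    return {name: convert(record[src]) for name, (src, convert) in mapping.items()}

rows = [
    standardize("crm", {"CustomerID": "C42", "Total": "99.50", "Date": "03/05/2024"}),
    standardize("ecommerce", {"user_id": 42, "amount_cents": 1999,
                              "created_at": "2024-05-03T14:12:00Z"}),
]
for row in rows:
    assert tuple(row) == CANONICAL_FIELDS  # same fields in the same order, whatever the source
print(rows)
```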

9. Support Interoperability with BI Tools and Data Science Platforms

As an essential part of lakehouse development, we enable smooth integration so that teams across the organization can work in their preferred analytics environments. This lets companies fully leverage their existing toolsets while benefiting from the scalability and flexibility of the new architecture. To unlock this possibility, we:

- Configure lakehouse architectures to integrate with BI tools and ML frameworks;

- Ensure unified query interfaces.

Why You Should Opt for Professional Data Warehouse Consulting

When deciding between a data lake and a data warehouse, consulting data professionals is the smartest choice. An expert team can show you how to align the storage solution with your organization’s specific goals, workloads, and data types, which is one of the main advantages of strategic technology consulting. Other benefits include:

- Tailored Needs Assessment: Data experts assess the data structure within your company and evaluate data volumes and analytics goals. This makes it possible to determine which option suits your goals best: a data lake or a data warehouse.

- Clarity on Technical Differences: Professionals explain the differences between these two solutions and how each handles different data formats to accommodate diverse business requirements.

- Mitigation of Common Pitfalls: Consultants help you avoid challenges like incompatibility with business requirements, increased costs, integration complexities, problems with data quality, scalability and flexibility.

- Best Practices in Data Governance and Security: Specialists offer you guidance on implementing governance and security for your solution of choice with particular consideration of access controls for warehouses or metadata management for lakes.

- Efficient Scaling Guidance: Experts ensure your storage solution can grow alongside your organization’s increasing data and analytics demands.

- Flexible Recommendations for Phased Implementation: Data specialists develop strategies to implement the data storage system step by step to guarantee smooth integration and minimum disruptions.

Consider SPD Technology for Data Warehouse Consulting Services

When you are deciding between a data lake and a data warehouse, our team offers precisely tailored guidance, backed by:

- Client-Centered, Consultative Approach: We begin each project by understanding your goals, challenges, and existing data setup. Through a detailed discussion with you, we choose a solution based on your business needs, whether you’re focused on analytics, scalability, or cost efficiency.

- Expertise in Data Architecture Assessment and Strategy: With deep expertise in data architecture and strategic planning, we evaluate your current and future data requirements to identify the solution that optimizes your data’s potential.

- Vendor-Neutral Recommendations: We provide unbiased guidance tailored to your organization, free from vendor influence, so our recommendations are based solely on what works best for your goals.

- Proven Experience Across Industries: Having worked with businesses in diverse industries, we understand the nuances of your sector and know how to apply the right data solution.

Implementing a Robust Data Lake for HaulHub Transportation & Construction Company

At SPD Technology, we have delivered dozens of projects involving building data lakes and data warehouses. One of the most notable examples is the data lake we created for our major US-based client, HaulHub.

Business Challenge

HaulHub turned to us for help with data warehousing when the company needed to manage over 70 million ticketing records and ensure uninterrupted operations with consistent, near real-time updates. One of the biggest problems with its data architecture was data scattered across multiple sources: files from agencies, contractors, vendors, and suppliers were unstructured and siloed, which hindered real-time analytics and made the necessary integrations impossible.

At the same time, the volume of data fed into the system kept growing beyond what it could process, so HaulHub also needed a highly scalable data infrastructure.

SPD Technology’s Approach

To solve these pressing data issues, our team designed and implemented a robust data lake capable of ingesting and storing large volumes of unstructured data. This approach provided scalable and flexible data management across HaulHub’s entire ecosystem. To ensure seamless data flow, we also developed resilient pipelines that process high data volumes from multiple sources and keep data available in near real time.

To meet the client’s need for real-time insights, our data experts built a high-performance OLAP system that integrates both historical and current data and delivers fast, accurate querying. We also enhanced the data infrastructure to support real-time processing and visualization, enabling the high-speed data delivery essential for operational efficiency. To address the scattered data, we unified disparate data sources into a structured, relational format using AWS DMS and custom ETL processes.
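To illustrate what unifying scattered sources into a relational format involves in general, here is a minimal, generic extract-transform-load sketch in Python. It is not the pipeline built for HaulHub, which relied on AWS DMS and custom ETL processes; the two source formats, their field names, and the `tickets` table are hypothetical, and an in-memory SQLite database stands in for the target relational store.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs: two sources with different formats and field names.
AGENCY_CSV = """ticket,material,tons
T-1,asphalt,12.5
T-2,gravel,8
"""

VENDOR_JSON = """[
  {"TicketNo": "T-3", "Item": "Asphalt", "Qty": "4.25"},
  {"TicketNo": "T-1", "Item": "asphalt", "Qty": "12.5"}
]"""


def extract_agency(text):
    # Extract: map the agency's CSV columns onto a common field order.
    for row in csv.DictReader(io.StringIO(text)):
        yield row["ticket"], row["material"], row["tons"], "agency"


def extract_vendor(text):
    # Extract: map the vendor's JSON keys onto the same field order.
    for rec in json.loads(text):
        yield rec["TicketNo"], rec["Item"], rec["Qty"], "vendor"


def transform(record):
    # Transform: normalize whitespace, casing, and numeric types.
    ticket_id, material, tons, source = record
    return ticket_id.strip(), material.strip().lower(), float(tons), source


def load(conn, records):
    # Load: write into one relational table with an enforced schema.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS tickets (
               ticket_id TEXT PRIMARY KEY,
               material  TEXT NOT NULL,
               tons      REAL NOT NULL,
               source    TEXT NOT NULL)"""
    )
    # INSERT OR IGNORE keeps the first copy of a ticket reported by several sources.
    conn.executemany("INSERT OR IGNORE INTO tickets VALUES (?, ?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
raw = list(extract_agency(AGENCY_CSV)) + list(extract_vendor(VENDOR_JSON))
load(conn, [transform(r) for r in raw])
print(conn.execute("SELECT COUNT(*) FROM tickets").fetchone()[0])  # 3
```

Deduplicating on a primary key is only one possible reconciliation rule; a production pipeline would typically also need change tracking, validation, and error handling for records that fail to parse.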

Results

- Efficient High-Volume Data Management: The data lake and optimized architecture allowed HaulHub’s platform to process millions of data points in under two seconds.

- Improved Data Visibility and Access: Stakeholders now get near real-time insights into work zone management, traffic patterns, and material ticketing, which makes data-driven decision-making easier.

- Enhanced Real-Time Reporting and Analytics: The OLAP system and custom dashboards give our client fast, accurate insights for more efficient operations and project tracking.

- Future-Proofed Infrastructure: Because HaulHub’s data architecture is built to scale, the system can accommodate the company’s growing data volumes without sacrificing performance.

- Streamlined Data Flow Across Stakeholders: With streamlined data integration, each stakeholder is now equipped with customized project views.

Conclusion

Choosing the right storage solution is crucial for ensuring data quality and compliance and for promoting a data-driven culture and innovation. Data lakes and data warehouses are common repositories for company data, but every business needs to choose the one that suits its objectives. Data lakes are the right choice when a company must handle many different data formats to support Big Data and ML workloads, while data warehouses fit cases where structured data is required for BI, reporting, and forecasting, or where strict compliance regulations apply.

Both solutions have their strengths. The benefits of data warehouses include high-quality, accurate, and well-organized data, as well as enhanced compliance, transparency, and accountability. The advantages of data lakes are flexibility, scalability, and versatility. Choosing the wrong solution risks incompatibility with business objectives, increased costs, complex data integrations, degraded data quality, poor performance, and limited flexibility and scalability.

There is also a solution that combines the strengths of both: the lakehouse. However, although this system may seem like a one-size-fits-all repository, it can be overly complex or costly for organizations with straightforward data needs. Implementing it successfully requires defining clear data governance standards, implementing solid security protocols, using tiered storage, enabling real-time data ingestion, establishing data catalogs and indexing, optimizing data for both batch and real-time processing, standardizing data formats and schemas, and supporting interoperability with BI tools and data science platforms.

Identifying your ideal data architecture can be challenging, but professional help makes it easier. Feel free to contact us, and we will share our experience and expertise in implementing suitable storage solutions.

FAQ

What Is a Data Lake vs a Data Warehouse?

A data warehouse focuses on structured data with schema-on-write, enabling fast analytics and reporting. A data lake emphasizes storing raw data with schema-on-read, making it suitable for diverse data types and advanced analytics.
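The contrast between schema-on-write and schema-on-read can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: an in-memory SQLite table stands in for the warehouse, a plain list of JSON strings stands in for lake object storage, and the `orders` table and sample records are hypothetical.

```python
import json
import sqlite3

# Schema-on-write: the structure is enforced when data is stored,
# so records that do not fit are rejected at load time.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL NOT NULL)"
)


def write_to_warehouse(record):
    try:
        warehouse.execute(
            "INSERT INTO orders (order_id, amount) VALUES (?, ?)",
            (record["order_id"], record["amount"]),
        )
        return True
    except (KeyError, sqlite3.IntegrityError):
        return False  # missing field or constraint violation: rejected on write


# Schema-on-read: raw records are stored as-is, and a schema
# is applied only when the data is queried.
lake = []


def write_to_lake(record):
    lake.append(json.dumps(record))  # nothing is validated on write


def read_amounts_from_lake():
    # Interpret raw records at query time, skipping those without a numeric amount.
    amounts = []
    for line in lake:
        rec = json.loads(line)
        if isinstance(rec.get("amount"), (int, float)):
            amounts.append(float(rec["amount"]))
    return amounts


incoming = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 2},                               # missing amount
    {"order_id": 3, "amount": 5, "note": "gift"},  # extra field
]

accepted = [write_to_warehouse(r) for r in incoming]
for r in incoming:
    write_to_lake(r)

print(accepted)                  # [True, False, True]
print(len(lake))                 # 3
print(read_amounts_from_lake())  # [19.99, 5.0]
```

The warehouse rejects the incomplete record and silently drops the extra `note` field, while the lake keeps every record in full and defers those decisions to whoever reads the data.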

Database vs Data Warehouse vs Data Lake: What Is the Difference?

A database handles real-time transactional data, ideal for operational tasks. A data warehouse processes structured data for analytics. A data lake stores raw, large-scale, diverse datasets for big data processing and machine learning.

When to Use Data Lake vs Data Warehouse?

We advise using a data lake for unstructured data, advanced analytics, and scalability, and opting for a data warehouse when structured data, fast querying, and BI reporting are the primary requirements.

How Are Data Marts Different from Data Warehouses?

A data mart serves specific departmental needs and contains focused subsets of data, while a data warehouse is organization-wide, integrating diverse datasets for broader analytics and reporting purposes.
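As a rough illustration of that relationship, the following Python sketch derives a departmental data mart from an organization-wide warehouse table. It assumes an in-memory SQLite database; the `sales_fact` and `marketing_mart` tables and their rows are hypothetical, and in practice a mart may also be built as a view or in a separate database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Organization-wide warehouse table integrating data from several departments.
conn.execute(
    "CREATE TABLE sales_fact (region TEXT, department TEXT, product TEXT, revenue REAL)"
)
conn.executemany(
    "INSERT INTO sales_fact VALUES (?, ?, ?, ?)",
    [
        ("EU", "marketing", "ads", 1200.0),
        ("US", "marketing", "ads", 800.0),
        ("US", "finance", "audit", 500.0),
        ("EU", "operations", "logistics", 300.0),
    ],
)

# Departmental data mart: only the marketing subset, pre-aggregated by region.
conn.execute(
    """CREATE TABLE marketing_mart AS
       SELECT region, SUM(revenue) AS revenue
       FROM sales_fact
       WHERE department = 'marketing'
       GROUP BY region"""
)

rows = conn.execute(
    "SELECT region, revenue FROM marketing_mart ORDER BY region"
).fetchall()
print(rows)  # [('EU', 1200.0), ('US', 800.0)]
```

The marketing team queries a small, purpose-built table, while the warehouse keeps the full cross-department history for broader analytics.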