How much does poor data quality cost businesses? According to Gartner, poor data quality costs organizations an average of $12.9 million a year. A 2023 Monte Carlo survey, in turn, found that data quality issues affected, on average, 31% of respondents’ revenue.

For a more tangible example, look no further than Unity Technologies’ fumble in 2022. After the company ingested bad data from a third party, its predictive ML algorithms for targeted player acquisition and advertising returned highly inaccurate results. Unity lost $110 million to a dip in revenue, model rebuilding costs, and delayed feature deployments.

Let’s explore how to keep your data quality top-notch, covering the data quality management lifecycle and process, metrics and dimensions, and best practices drawn from our experience.

Why Managing Data Quality Is Crucial

Robust data quality management ensures that people across the organization can trust the data they access. If this data isn’t up to date and consistent, you risk joining the 85% of companies that make incorrect decisions and lose revenue due to stale data.

High-quality data is crucial for more than decision-making, however. It’s also the foundation for all other benefits you can reap with your data. Those include reduced operational costs, enhanced risk management, improved customer experience, and so on.

5 More Value-Adding Reasons to Manage Data Quality

Besides enhancing decision-making across the organization, adopting a strategic approach to data quality management enterprise-wide allows companies to:

- Improve Operational Efficiency: Accurate, timely data will help eliminate bottlenecks and redundancies, streamlining processes and boosting productivity as a result.

- Enhance Risk Management: High-quality data can help identify emerging threats early on and, with the help of advanced analytics, assess their potential impact more accurately.

- Boost Revenue and Profitability: Data-driven strategic decisions can help optimize marketing, sales, and product development to drive profits and revenue. High-performing data organizations are three times more likely to report an EBIT (Earnings Before Interest and Taxes) boost of at least 20% from data and analytics initiatives.

- Optimize Resource Allocation: Accurate data will help you assess how efficiently resources are used, find ways to improve that efficiency, and eliminate waste, cutting costs and improving margins and profitability as a result.

- Power Innovation: Reliable data is the foundation for maximizing the business impact of Big Data and AI/ML. Together with these technologies, it can power new business models and innovative products and services.

What Is Data Quality Management (DQM)?

Data quality management (DQM) means ensuring enterprise data remains consistent, accurate, reliable, and fit for purpose. It involves a variety of practices, technologies, tools, and procedures applied to the ensemble of enterprise data, from master data and historical data to transactional data and Big Data.

Data quality management is one of the four key components of overall data management; the other three are data security, data access, and data lifecycle management. Data quality is also a crucial element of any data management framework.

Still, not all data is created equal. Different data types present unique quality challenges and therefore require specific practices and technologies. Your DQM strategy has to address those challenges with tailored processes and measures to achieve your overarching data management goals.

Data Quality Management Process & Lifecycle

Data quality management is best understood through the DQM lifecycle and the DQM process. Let’s discover what each of them means.

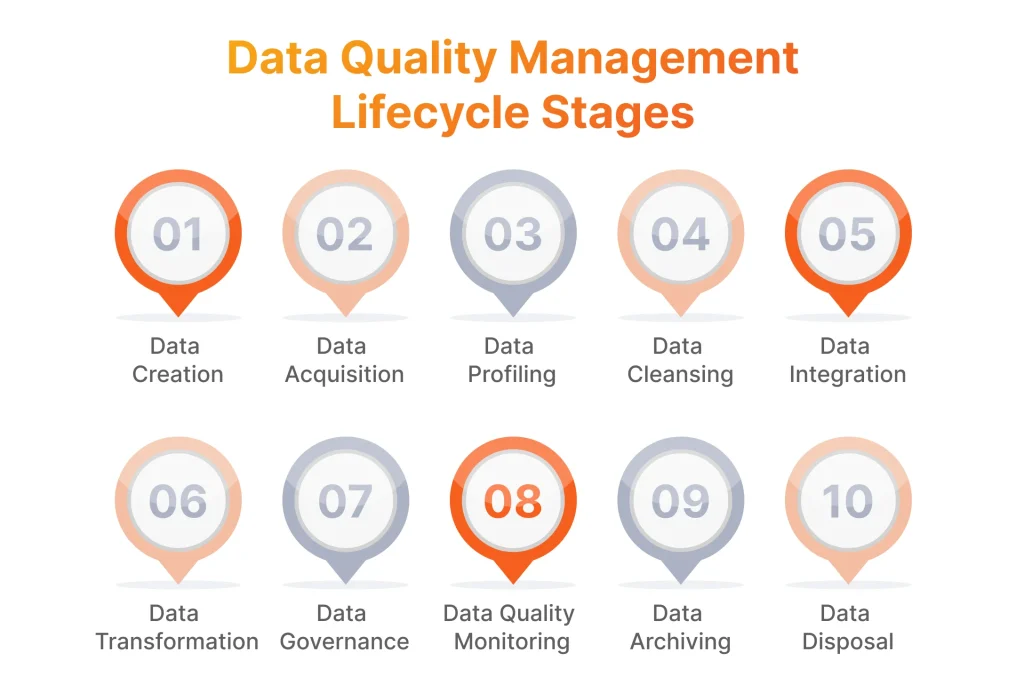

The data quality management (DQM) lifecycle is a broad concept for ensuring data quality across all stages of the data lifecycle, from creation to deletion. Keep in mind that it’s not the same as the DQM process (more on that below).

The data quality management lifecycle consists of the following:

- Data Creation: Data is created within the organization via manual data entry or automatic data capture. Failing to ensure data accuracy and relevance at creation will negatively impact the entire DQM lifecycle.

- Data Acquisition: Data is acquired from sources like databases and third-party partners. As with data creation, ensuring data quality at acquisition makes DQM easier at the later stages of the lifecycle.

- Data Profiling: Data is analyzed for quality, completeness, and consistency. Specialized tools identify anomalies, duplicates, and other data quality issues that may undermine data reliability.

- Data Cleansing: Profiled data is manipulated to rectify the revealed inaccuracies and inconsistencies in the process known as data cleansing, cleaning, or scrubbing. This stage involves removing duplicates, filling in missing values, and standardizing formats.

- Data Integration: Cleansed data is consolidated in a unified system to resolve conflicts between datasets and establish a single source of truth.

- Data Transformation: If needed, data is converted into a specific format or aligned with a pre-defined structure while maintaining its integrity and quality. This stage prepares data for particular analytical or operational purposes.

- Data Governance: This stage involves implementing data management policies and practices like access controls, ownership, and stewardship. They help maintain regulatory compliance and establish accountability for data quality.

- Data Quality Monitoring: Keeping track of data quality metrics lets your team quickly catch and fix data quality issues. It should be an ongoing assessment to maintain data quality, accuracy, and reliability in the long run.

- Data Archiving: Once data is no longer necessary for day-to-day operations, it’s archived to optimize storage and performance. It should remain retrievable and continue to meet data quality standards.

- Data Disposal: Once the data is no longer needed at all, it’s erased. This stage is vital for complying with regulatory requirements, as well as mitigating risks associated with data breaches and unauthorized access.
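To make the cleansing stage more concrete, here is a minimal Python sketch of the three operations it mentions: standardizing formats, filling in missing values, and removing duplicates. The record fields, the `DEFAULTS` mapping, and the function names are hypothetical and chosen purely for illustration; real pipelines usually rely on dedicated tools and much richer matching logic.

```python
import re

# Hypothetical customer records exported from two source systems.
RAW_RECORDS = [
    {"customer_id": "C001", "email": " Alice@Example.com ", "phone": "(555) 010-0001", "country": "us"},
    {"customer_id": "C001", "email": "alice@example.com", "phone": "555.010.0001", "country": "US"},
    {"customer_id": "C002", "email": "bob@example.com", "phone": None, "country": None},
]

# Assumed default values for fields that may be missing.
DEFAULTS = {"country": "UNKNOWN"}


def standardize(record: dict) -> dict:
    """Normalize formats so that equivalent values compare equal."""
    clean = dict(record)
    if clean.get("email"):
        clean["email"] = clean["email"].strip().lower()
    if clean.get("phone"):
        # Keep digits only: "(555) 010-0001" -> "5550100001"
        clean["phone"] = re.sub(r"\D", "", clean["phone"])
    if clean.get("country"):
        clean["country"] = clean["country"].strip().upper()
    return clean


def fill_missing(record: dict) -> dict:
    """Replace None with a default where one is defined; other gaps stay None."""
    return {k: (DEFAULTS.get(k, v) if v is None else v) for k, v in record.items()}


def deduplicate(records: list) -> list:
    """Drop exact duplicates (after standardization), keeping the first occurrence."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(sorted(record.items()))
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def cleanse(records: list) -> list:
    """Standardize formats, fill gaps, then remove duplicates."""
    return deduplicate([fill_missing(standardize(r)) for r in records])


if __name__ == "__main__":
    for row in cleanse(RAW_RECORDS):
        print(row)
```

The order of the steps matters: the two `C001` rows only become exact duplicates once email casing and phone punctuation have been standardized.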

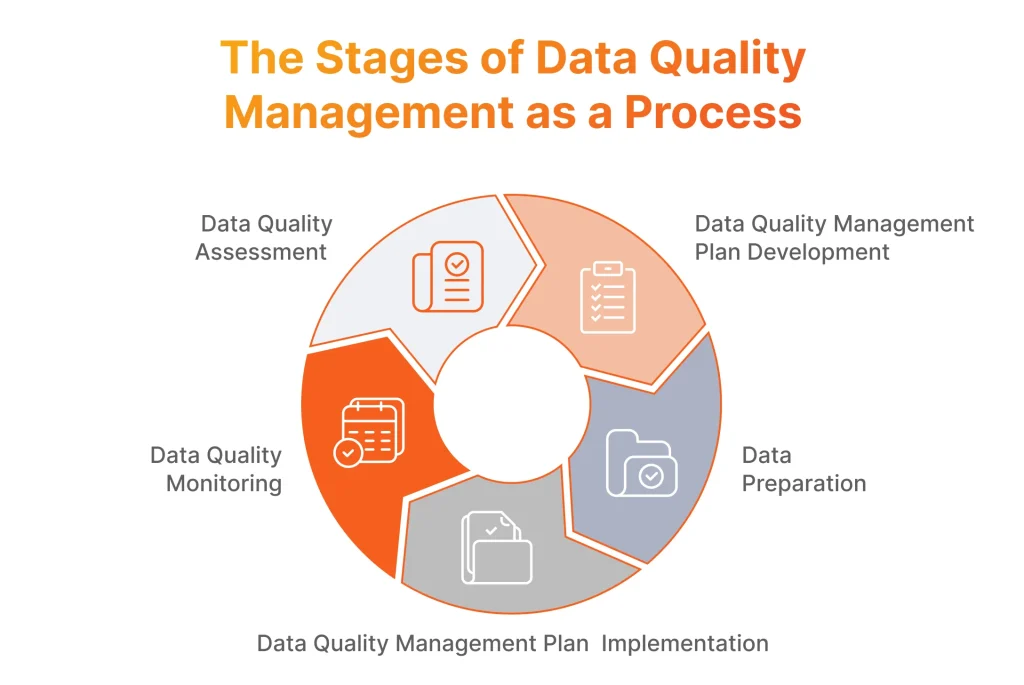

The term ‘DQM lifecycle’ covers all the stages of the data lifecycle, each of which requires specific data quality measures. The DQM process, in contrast, describes the specific measures taken at each of those stages.

The DQM process is systematic, continuous, and cyclical. It consists of specific procedures and policies that are applied to data methodically. It’s an ongoing effort that involves continuously improving DQM practices to keep up with changes in data types, sources, or volumes. The DQM process also takes place in iterations to enable you to regularly assess and improve data quality.

It consists of the following phases:

- Current data quality assessment

- Data quality management plan development

- Preparation of the data for the plan’s implementation

- Implementation of the data quality management plan

- Continuous monitoring of data quality.

Data Quality Metrics & Dimensions

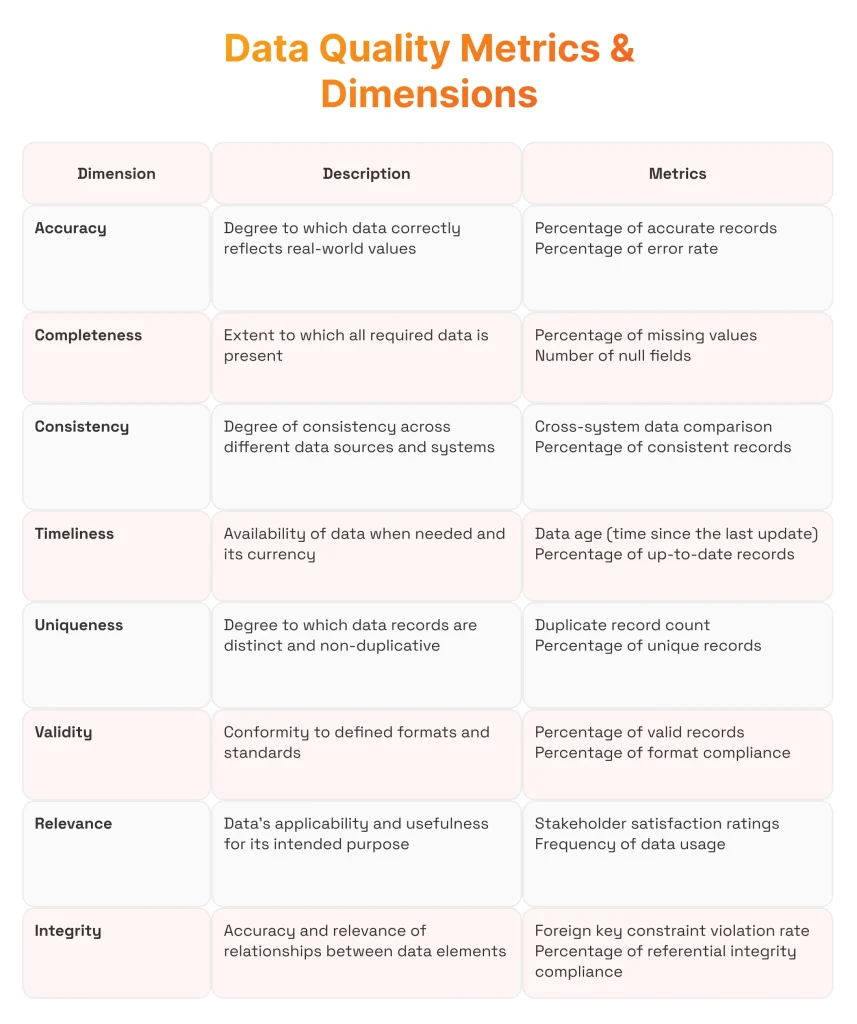

Evaluating and monitoring data quality throughout its lifecycle is impossible without tracking specific metrics across eight data quality dimensions.

Data quality dimensions are specific attributes of data that together determine its quality. Each dimension also comes with particular metrics that reflect the data quality in this specific case.

Dmytro Tymofiiev

Delivery Manager at SPD Technology

“Monitoring and evaluating data quality metrics and dimensions is crucial for getting a full, unbiased understanding of the current data assets’ quality – and improving it. As a structured framework for measuring data quality, they help you understand whether data quality standards are maintained within the organization and track data quality improvement or catch its deterioration.”

Data quality is measured across eight dimensions, each with its own metrics: accuracy, completeness, consistency, timeliness, uniqueness, validity, relevance, and integrity.
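As a rough illustration of how some of these dimensions become measurable metrics, the Python sketch below computes completeness, uniqueness, validity, and timeliness as ratios between 0 and 1. The field names, the deliberately simple email pattern, the sample records, and the seven-day freshness window are all assumptions; the right definitions and thresholds depend on your own business rules.

```python
import re
from datetime import datetime, timedelta, timezone

# Deliberately simple format check; real email validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(records, field):
    """Share of records where `field` is present and non-empty."""
    if not records:
        return 1.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def uniqueness(records, key):
    """Distinct key values divided by record count (1.0 means no duplicates)."""
    if not records:
        return 1.0
    return len({r.get(key) for r in records}) / len(records)


def validity(records, field, pattern):
    """Share of non-empty values in `field` that match the expected format."""
    values = [r[field] for r in records if r.get(field)]
    if not values:
        return 1.0
    return sum(1 for v in values if pattern.match(v)) / len(values)


def timeliness(records, field, max_age, now):
    """Share of records whose `field` timestamp is no older than `max_age`."""
    if not records:
        return 1.0
    fresh = sum(1 for r in records if now - r[field] <= max_age)
    return fresh / len(records)


# Hypothetical sample data.
NOW = datetime(2024, 1, 31, tzinfo=timezone.utc)
RECORDS = [
    {"id": 1, "email": "a@example.com", "updated_at": datetime(2024, 1, 30, tzinfo=timezone.utc)},
    {"id": 2, "email": "not-an-email", "updated_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"id": 2, "email": None, "updated_at": datetime(2024, 1, 29, tzinfo=timezone.utc)},
    {"id": 3, "email": "c@example.com", "updated_at": datetime(2023, 12, 1, tzinfo=timezone.utc)},
]

if __name__ == "__main__":
    print("completeness:", completeness(RECORDS, "email"))  # 0.75
    print("uniqueness:", uniqueness(RECORDS, "id"))  # 0.75
    print("validity:", validity(RECORDS, "email", EMAIL_PATTERN))
    print("timeliness:", timeliness(RECORDS, "updated_at", timedelta(days=7), NOW))  # 0.5
```

Dimensions such as relevance or accuracy usually can't be computed from the dataset alone; they need business context or a trusted reference source to compare against.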

4 Data Quality Management Best Practices

Building an effective data quality management process is vital for maximizing its ROI, reducing data TCO, and ensuring the data meets the needs of multiple teams and departments. Let’s discover how to develop a robust DQM process by following these four best practices based on our experience.

Define Business-Driven Data Quality Rules

While data quality is crucial for enabling trust in the data itself and in the analytics built on it, data quality efforts are of little use if they don’t align with specific business goals and end users’ data needs. For example, if your definition of timeliness contradicts that of business users, they won’t get the latest data when they need it, and so they won’t be able to perform their duties effectively.

So, before you define the DQM process, establish the data quality rules that will apply throughout the organization. Involve senior leadership, mid-level executives, and business users across functions to define the business goals that data quality should support. Ensure your DQM process aligns with the overall data strategy, as well.

How We Do It: We conduct workshops and interviews with stakeholders on all levels to zero in on the data quality standards that align with your unique context, needs, and constraints. We also implement automated validation rules across business systems and continuously monitor data quality to adapt the rules and standards to your evolving business objectives.
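One way to keep validation rules tied to the business is to store each rule together with the function that agreed on it. The Python sketch below is a hypothetical illustration rather than a description of any particular tool: the `Rule` class, the order fields, the allowed statuses, and the owners are all invented for the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    owner: str                     # business function that agreed on the rule
    check: Callable[[dict], bool]  # returns True when a record passes


# Hypothetical rules for an orders dataset, each owned by a business function.
RULES = [
    Rule("non_negative_total", "Finance", lambda r: r["order_total"] >= 0),
    Rule("ships_after_order", "Operations",
         lambda r: r["ship_date"] is None or r["ship_date"] >= r["order_date"]),
    Rule("known_status", "Sales",
         lambda r: r["status"] in {"new", "paid", "shipped", "cancelled"}),
]


def validate(records, rules):
    """Return (order_id, rule name, owner) for every failed check."""
    violations = []
    for record in records:
        for rule in rules:
            if not rule.check(record):
                violations.append((record["order_id"], rule.name, rule.owner))
    return violations


# Hypothetical sample orders: A1 is clean, A2 breaks all three rules.
ORDERS = [
    {"order_id": "A1", "order_total": 120.0, "order_date": date(2024, 3, 1),
     "ship_date": date(2024, 3, 3), "status": "shipped"},
    {"order_id": "A2", "order_total": -5.0, "order_date": date(2024, 3, 2),
     "ship_date": date(2024, 3, 1), "status": "shiped"},
]

if __name__ == "__main__":
    for violation in validate(ORDERS, RULES):
        print(violation)
```

Recording an owner for each rule makes it clear who decides when a rule has to change as business objectives evolve.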

Perform Root Cause Analysis

According to McKinsey, around 60% of tech executives cite poor data quality as the main roadblock to scaling data solutions. However, identifying the data quality issues you’re facing is far easier than finding the right solution for fixing them permanently.

Dmytro Tymofiiev

Delivery Manager at SPD Technology

“If you focus on fixing the symptoms of data quality issues with temporary patchwork without addressing their root causes, you’ll only be wasting resources – and those errors will continue to persist. That’s why you should invest time and resources into root cause analysis for each issue.”

To resolve data quality issues once and for all, you need to turn your attention to their root causes. Root cause analysis, as the name suggests, will help you zero in on the root of recurring errors and issues. With the root cause identified, you can invest resources into eliminating it, maximizing resource efficiency as a result.

How We Do It: We collaborate closely with your team to identify data quality problems and their underlying causes. We also rely on data metrics and data lineage to catch the issues and causes that might go unnoticed by your teams. Then, we design and implement targeted solutions to fix those issues once and for all, effectively ensuring data integrity in the long run.
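Data lineage is what makes it possible to trace a symptom back to its source. The Python sketch below assumes a hypothetical lineage map (each dataset listed with its direct upstream inputs) and a set of datasets whose quality checks are currently failing. It walks upstream only through failing datasets and reports those whose own inputs are all healthy, which are the most likely places to start the root cause investigation.

```python
# Hypothetical lineage map: each dataset -> its direct upstream inputs.
LINEAGE = {
    "revenue_dashboard": ["sales_mart"],
    "sales_mart": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
    "fx_rates": ["vendor_fx_feed"],
}

# Hypothetical set of datasets whose quality checks are currently failing.
FAILING = {"revenue_dashboard", "sales_mart", "fx_rates", "vendor_fx_feed"}


def find_root_causes(dataset, lineage, failing):
    """Walk upstream through failing datasets only and return the failing
    datasets whose direct inputs all pass their checks."""
    if dataset not in failing:
        return set()
    roots, visited, stack = set(), set(), [dataset]
    while stack:
        node = stack.pop()
        if node in visited:
            continue
        visited.add(node)
        failing_inputs = [p for p in lineage.get(node, []) if p in failing]
        if failing_inputs:
            stack.extend(failing_inputs)  # the problem came from further upstream
        else:
            roots.add(node)  # failing, yet all inputs are healthy: likely origin
    return roots


if __name__ == "__main__":
    print(find_root_causes("revenue_dashboard", LINEAGE, FAILING))  # {'vendor_fx_feed'}
```

In this example, the dashboard, the sales mart, and the FX table all fail their checks, but the walk points to the external feed as the place where the problem originates. A dataset flagged this way may still be broken by its own transformation logic rather than its inputs, so the result narrows the search rather than settling it.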

Automate Data Profiling and Cleansing

An average data scientist spends 45% of their time preparing data for use instead of developing and tuning analytics models. Much of this resource waste is avoidable, however. Well-chosen or custom-built automation tools, properly implemented, can largely eliminate the need for manual data profiling and cleansing.

Besides freeing up specialists’ time for more value-added tasks, automating these processes mitigates the risk of human error. Because automated processes run the same way every time, you avoid inconsistencies and errors in the datasets and reduce the chance that issues go unnoticed.

How We Do It: We develop custom automated tools or help select off-the-shelf solutions to streamline data profiling. We also integrate real-time data cleansing workflows into the data pipelines. To maximize your efficiency gains from automated tools, we leverage AI/ML for automation and enable full visibility into data quality with customizable dashboards.
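Automated profiling can start as simply as summarizing every column on each pipeline run. The Python sketch below is a minimal, hypothetical example that assumes records are dictionaries of hashable scalar values: for each field, it counts nulls (treating missing keys as nulls), counts distinct values, reports the most frequent value, and lists the Python types observed. Mixed types in one column, such as a price stored both as a number and as a string, are a typical anomaly this kind of report surfaces.

```python
from collections import Counter


def profile(records):
    """Summarize each column: nulls, distinct values, most common value, types seen.
    Keys missing from a record are counted as nulls; values must be hashable."""
    columns = sorted({key for record in records for key in record})
    report = {}
    for column in columns:
        values = [record.get(column) for record in records]
        non_null = [v for v in values if v is not None]
        counts = Counter(non_null)
        report[column] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0] if counts else None,
            "types": sorted({type(v).__name__ for v in non_null}),
        }
    return report


# Hypothetical product rows with missing stock values and an inconsistently typed price.
ROWS = [
    {"sku": "A-100", "price": 9.99, "stock": 5},
    {"sku": "A-100", "price": "9.99", "stock": None},
    {"sku": "B-200", "price": 4.5},
]

if __name__ == "__main__":
    for column, stats in profile(ROWS).items():
        print(column, stats)
```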

Build a Unified Master Data Set

Master data is the relatively stable enterprise data used for reference across systems and functions. It includes information about customers (e.g., name, phone number), products (e.g., SKUs), suppliers (e.g., supplier ID), locations (e.g., address), and so on.

Building a master data set provides a single source of truth for all essential data across the organization. Consolidating master data ensures core data vital for your business remains consistent, accurate, and up-to-date. For example, if the customer’s phone number changes, marketing, sales, and customer service teams will all have access to the new number, without additional manual data entry.
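The phone number example depends on a survivorship rule that decides which value wins when source systems disagree. The Python sketch below assumes a simple "latest non-null value wins" rule and hypothetical CRM, billing, and support records; MDM solutions typically support more elaborate rules, such as trusting a specific source for a specific field.

```python
from datetime import datetime

# Hypothetical customer records held in three systems.
SOURCE_RECORDS = [
    {"source": "crm", "customer_id": "C001", "name": "Alice Smith",
     "phone": "5550100001", "updated_at": datetime(2024, 1, 10)},
    {"source": "billing", "customer_id": "C001", "name": None,
     "phone": "5550109999", "updated_at": datetime(2024, 3, 5)},
    {"source": "support", "customer_id": "C002", "name": "Bob Lee",
     "phone": None, "updated_at": datetime(2024, 2, 1)},
]

# Fields that describe the record itself rather than the customer.
METADATA_FIELDS = {"source", "customer_id", "updated_at"}


def build_golden_records(records):
    """Merge records per customer_id with a 'latest non-null value wins' rule."""
    golden = {}
    # Oldest first, so newer non-null values overwrite older ones.
    for record in sorted(records, key=lambda r: r["updated_at"]):
        merged = golden.setdefault(record["customer_id"],
                                   {"customer_id": record["customer_id"]})
        for field, value in record.items():
            if field in METADATA_FIELDS:
                continue
            if value is not None:
                merged[field] = value
            else:
                merged.setdefault(field, None)  # keep the field, never erase a known value
        merged["updated_at"] = record["updated_at"]
    return golden


if __name__ == "__main__":
    for customer_id, record in build_golden_records(SOURCE_RECORDS).items():
        print(customer_id, record)
```

Here, the billing system's newer phone number replaces the CRM value, while the CRM name survives because billing holds no name for that customer.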

How We Do It: We implement a robust master data management (MDM) solution to consolidate all master data and ensure it conforms to the established data standards. We also put in place ongoing governance, access control tools, and the policies and technology needed to uphold master data quality.

3 Data Quality Management Challenges and How We Address Them

While the four best practices above will help you avoid some of the common data quality management pitfalls, there are three persistent challenges we often have to address when providing data management services. Let’s break down what they are and how we counter them.

Dynamic Data Environments

Data volumes are forecast to increase more than tenfold over the decade between 2020 and 2030. That growth translates into a higher load on data and analytics infrastructure: two-thirds of IT and analytics professionals expect data volumes to rise by an average of 22% within 12 months.

Add this increase in data volumes to the mounting complexity and variety of data types and sources, and you may end up in a situation where those changes outgrow the established data quality processes.

Dmytro Tymofiiev

Delivery Manager at SPD Technology

“If your DQM doesn’t keep up with the evolution of new data supply, its quality may deteriorate over time, and the costs and complexity of DQM itself can balloon. That’s why you need to design the data quality management process with scalability across data sources, volumes, and types in mind.”

How We Address It: We emphasize scalability when designing and implementing data quality frameworks so that they can accommodate new data sources and types effectively. We also leverage automation tools to ensure your organization can easily monitor and cleanse incoming data no matter its volume. On top of that, we pay particular attention to data integration across diverse systems and data types.

Data Quality Degradation

Data quality management isn’t a one-and-done project. Over time, even the highest-quality data deteriorates as business processes and external circumstances (e.g., regulatory requirements) evolve. As a result, data may become inaccurate or outdated if you don’t continuously check and improve data quality – and regularly review and fine-tune your DQM processes.

Needless to say, failing to continuously verify and maintain data quality undermines the reliability of analytics insights and may lead to poor decision-making.

How We Address It: We implement continuous monitoring and auditing to catch data degradation in real time and mitigate it. We also introduce data stewardship to maintain consistent data accuracy and relevance in the long run.
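A basic form of continuous monitoring compares each run's metrics with agreed thresholds and with the previous run, so that gradual degradation is flagged before it reaches downstream users. In the Python sketch below, the metric names, threshold values, and the 0.05 drop tolerance are assumptions chosen for illustration.

```python
def check_degradation(current, previous, thresholds, max_drop=0.05):
    """Flag metrics that fall below their agreed threshold or that dropped
    by more than `max_drop` since the previous run."""
    alerts = []
    for metric, value in current.items():
        floor = thresholds.get(metric)
        if floor is not None and value < floor:
            alerts.append(f"{metric}: {value:.2f} is below threshold {floor:.2f}")
        prior = previous.get(metric)
        if prior is not None and prior - value > max_drop:
            alerts.append(f"{metric}: dropped from {prior:.2f} to {value:.2f}")
    return alerts


# Hypothetical metric values (0 to 1) from two consecutive daily runs.
THRESHOLDS = {"completeness": 0.95, "uniqueness": 0.98, "timeliness": 0.75}
YESTERDAY = {"completeness": 0.97, "uniqueness": 0.99, "timeliness": 0.82}
TODAY = {"completeness": 0.91, "uniqueness": 0.99, "timeliness": 0.80}

if __name__ == "__main__":
    for alert in check_degradation(TODAY, YESTERDAY, THRESHOLDS):
        print(alert)
```

Checking both an absolute floor and the change since the last run catches sudden breaks as well as slow drift that stays above the threshold for a while. In practice, alerts like these would be routed to the responsible data steward rather than printed.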

Resource Constraints

Even the best-laid DQM plans can’t succeed without enough resources to make them a reality. Those resources include talent, funding, and technology. A lack of resources can stem from underestimating the scope of the DQM implementation project or from insufficient buy-in at the executive level.

Dmytro Tymofiiev

Delivery Manager at SPD Technology

“Resource allocation should remain continuous as the DQM process itself is unceasing. Once the final steps in the process implementation are complete, you’ll still need the talent and funding to conduct audits and fine-tune data quality management processes, tools, and rules.”

Securing executive buy-in by communicating the expected ROI is typically a prerequisite for obtaining DQM funding. However, minimizing DQM operational costs is also a must for overcoming resource constraints. This can be done by leveraging automation and the benefits of cloud computing infrastructure.

How We Address It: We help you select the most cost-efficient tools available to power DQM, all while automating as many DQM processes as possible. This frees up your DQM team to focus on value-adding tasks while minimizing running costs.

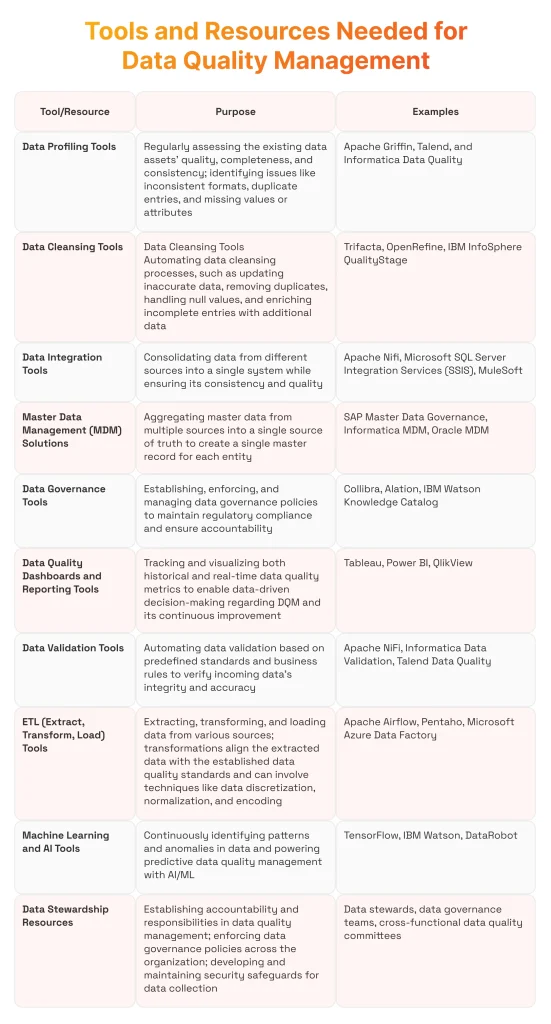

10 Tools and Resources You’ll Need for Data Quality Management

Maintaining data quality in the long run requires specific tools and resources to power data quality management, from data profiling to governance and data quality monitoring. Based on our experience, any strategic data quality management effort should involve the following tools and resources.

Why Consider Professional Data Quality Management Services?

Getting the data quality management process right from the start will minimize its running costs and maximize its efficiency. At the same time, it’ll ensure you reach your main goal: maintaining data quality standards across the organization both in the immediate future and in the years to come.

However, getting DQM right requires specialized expertise in data science, engineering, integration, and more. Securing it in-house may prove challenging, not to mention costly.

Data quality management is where the advantages of strategic technology consulting are quite pronounced. A professional data management service vendor, after all, has the required technical skills on standby, with specialists ready to take on your project right away. (In contrast, an in-house data scientist role takes 60 days to fill, on average.)

Besides the facilitated access to this valuable technical expertise, turning to a reliable data management partner allows you to reap additional strategic benefits like:

- Cost Optimization: An experienced DQM partner knows exactly which processes can be automated and how, as well as how to prevent common operational inefficiencies to avoid resource waste.

- Futureproof Solutions: A DQM partner will ensure your DQM processes scale as data volumes, types, and sources increase. The partner’s experience with advanced technologies like AI/ML and Big Data will also help lay the groundwork for leveraging them.

- Faster Time-to-Market: Data quality management experts rely on a time-tested methodology, enhanced with best practices and tools, to deliver results as fast as possible, accelerating time-to-market and time-to-value.

Why Choose SPD Technology for Data Quality Management Solutions?

At SPD Technology, we deliver value-adding data management consulting services that help our clients maintain data quality with scalable solutions tailored to their unique challenges and needs. Our end-to-end data management services include both technical and strategic support, from DQM strategy consulting and deployment to training and continuous DQM maintenance and improvement.

To deliver value-driving DQM solutions to our clients, we rely on the following three pillars of success:

- Time-Tested Methodology and Best Practices: We’ve been helping businesses drive growth with software solutions since 2006. Our experience powers our DQM methodology and informs our best practices, allowing us to align your solutions with your unique needs perfectly.

- End-to-End Services: We provide the full range of DQM services to help you design, implement, and maintain your DQM processes. We approach data quality holistically, with consideration for every aspect from data governance and master data management to continuous support and optimization.

- Advanced Technology Expertise: Our expertise spans well beyond data quality management; we’re well-versed in AI/ML, Big Data, cloud computing, and data warehousing tools. This range of expertise allows us to integrate your DQM solutions with advanced technologies efficiently and with minimum disruptions.

Conclusion

Data quality management is the foundation for any benefits you may be striving to gain from your data. Without a comprehensive, professionally designed and implemented approach to DQM, you’re unlikely to see improvements in decision-making, operational efficiency, or risk management.

So, while data quality management is just one of the components of enterprise data management, it is perhaps the most crucial one. That’s why you should tackle it only when you’re certain you have the right expertise to design and implement the DQM process in line with your needs and objectives.

If you’re looking for a partner with holistic data quality management expertise, we, at SPD Technology, are at your service. We’ll help you design and implement the processes, policies, and tools to ensure your data remains reliable while optimizing running costs, ensuring scalability, and preventing data quality degradation.

Learn more about our data management services and how they can help you derive the maximum value from your data.

FAQ

What is data quality management?

Data quality management (DQM) is the process of maintaining data quality across the organization. The DQM process is the ensemble of measures applied at each stage of the DQM lifecycle to maintain data quality.

Data quality is measured across eight dimensions: accuracy, completeness, consistency, timeliness, uniqueness, validity, relevance, and integrity. As part of the overall data management process, DQM should address the unique challenges associated with each data type (master data, operational data, etc.).

Why is data quality management important?

Data quality management is vital for ensuring your data remains reliable and trustworthy in the long run. That, in turn, allows you to reap all the other benefits of data and analytics, such as:

- Smarter decision-making

- Improved operational efficiency

- Enhanced risk management

- Boosted profitability and revenue

- Optimized resource allocation

- Facilitated innovation.

What are the components of data quality management?

Data quality management measures can be broken down into the following eight components:

- Data profiling

- Data cleansing and validation

- Data transformation

- Data governance

- Data quality monitoring and continuous improvement

- Data quality rules

- Data quality assessment

- Data issue management.

What are the goals of data quality management?

Data quality management aims to maintain the high quality of enterprise data with a combination of practices, processes, and technology solutions. However, the data quality standards and rules are unique to each organization as they depend on specific business needs and goals.

In general, data quality management is meant to ensure data quality across eight dimensions, each with its own metrics to track: consistency, completeness, accuracy, uniqueness, timeliness, validity, relevance, and integrity.